Building software that survives contact with reality, with Will Wilson

Today, Patrick McKenzie is joined by Will Wilson, CEO of Antithesis, to discuss the evolution of software testing from traditional approaches to cutting-edge deterministic simulation. Will explains how his team built technology that creates "time machines" for distributed systems, enabling developers to find and debug complex failures that would be nearly impossible to reproduce in traditional testing environments.

[Patrick notes: As always, there are some after-the-fact observations sprinkled into the transcript, set out in this format.]

Sponsor: Mercury

This episode is brought to you by Mercury, the fintech trusted by 200K+ companies — from first milestones to running complex systems. Mercury offers banking that truly understands startups and scales with them. Start today at Mercury.com

Mercury is a financial technology company, not a bank. Banking services provided by Choice Financial Group, Column N.A., and Evolve Bank & Trust; Members FDIC.

Timestamps

(00:00) Intro

(01:23) Database scaling and the CAP theorem

(08:13) Abstraction layers and hardware reality

(15:28) The problem with traditional testing

(19:43) Sponsor: Mercury

(23:16) The fuzzing revolution

(30:35) Deterministic simulation testing

(42:36) Real-world testing strategies

(47:22) Introducing Antithesis

(59:23) The CrowdStrike example

(01:01:15) Finding bugs in Mario

(01:07:37) Property-based vs conventional testing

(01:09:51) The future of AI-assisted development

(01:14:51) Wrap

Transcript

Patrick McKenzie: Hello everybody. My name is Patrick McKenzie, better known as patio11 on the Internet. And I'm here with Will Wilson, who is the CEO of Antithesis.

Will Wilson: Thanks for having me on.

Patrick: So a bit of context setting for the audience, as I know folks come here with all variety of backgrounds. Today we're going to be talking about quite a technical subject—how we do software testing and fault discovery and how that has evolved over the last couple of years. But I will try to be the voice of the audience and make it comprehensible, and Will will correct me where my increasingly out-of-date engineering degree does not serve me well.

Will: Yeah. I will keep this grounded in real world examples. We can draw some connections to the non-software parts of the world, like financial markets or the airplanes you fly on or your power grid.

Patrick: Much obliged on behalf of the general infrastructure audience here.

So speaking about things in the real world that everyone has touched before, many of us use iPhones. iCloud runs behind a commanding majority of iPhones at this point. [Patrick notes: I don’t even know if you can completely opt out anymore.]

I think you and the team wrote the database that essentially orchestrates iCloud. Well, a successor technology of what you wrote orchestrates iCloud.

What do people not understand about databases running at scale at the largest companies in the world?

Database scaling and the CAP theorem

Will: Sure. So yeah, that's a great place to start. So my previous startup was a company called FoundationDB, which got acquired by Apple back in 2015. And basically in the early 2010s—I don't know how much of your audience knows about this—but there was kind of a fad for, let's say, really weird databases because essentially what you had up to that point was single machine databases that ran on a single giant computer. [Patrick notes: Ah, to be an engineer again. We were getting a new NoSQL database every few weeks!]

And, you know, they offered you SQL, which everybody or a lot of people knew how to use. But these had a problem, which was that sometimes a very, very big company needs to store so much data that it does not fit on one computer, or sometimes they need to do so many transactions per second that all that computation can't happen on a single computer.

And so lots of really smart people started trying to figure out how to write distributed databases. That is, a database that runs on multiple computers at the same time. The problem, or let's just say the thing that happened was everybody decided that it would be an awful lot of work to get that whole collection of computers all together, speaking SQL or acting like it was one computer, you know, for the benefit of the programmer or the person who's doing the query.

And there was a sort of vogue for making really, really weird seeming databases with really strange consistency semantics, meaning different people querying the database at the same time might get different views on the data.

[Patrick notes: This is perhaps not phrased in a way that would cause alarm. Consider a bank with an inconsistent view of how much money you have. It might debit your account $200 from a $1,000 starting balance and, after the debit, check your balance and see $1,000.

That is actually much more common than you would think, but we try to keep it rare enough that the authorization errors the bank has to eat do not, e.g., bankrupt it.]
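[Illustrative sketch: the stale balance in the note above can be reproduced with a toy model. The class and method names are hypothetical, and the two-replica design with delayed copying is an assumption made for illustration, not a description of any real bank or database.]

```python
class EventuallyConsistentAccount:
    """Hypothetical account stored on two replicas; debits reach the second one late."""

    def __init__(self, balance: int) -> None:
        self.primary = balance      # replica that accepts writes
        self.secondary = balance    # replica that serves some reads
        self.pending = []           # debits not yet copied to the secondary

    def debit(self, amount: int) -> None:
        # The write lands on the primary immediately.
        self.primary -= amount
        self.pending.append(amount)

    def read_from_secondary(self) -> int:
        # A reader routed here may see an out-of-date balance.
        return self.secondary

    def replicate(self) -> None:
        # Copy outstanding debits across; in a real system this happens "eventually".
        for amount in self.pending:
            self.secondary -= amount
        self.pending.clear()


account = EventuallyConsistentAccount(1000)
account.debit(200)
stale = account.read_from_secondary()   # still 1000: the debit has not propagated
account.replicate()
fresh = account.read_from_secondary()   # now 800
print(stale, fresh)
```

A consistent database, in the sense discussed here, would never let a reader observe the 1000 after the debit was acknowledged.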

Will continues: And if that's a problem, well, sorry, you, the database user, need to reconfigure your business processes or you need to write really, really smart software that can figure out how to deal with that problem and give you whatever sort of level of consistency it is that you need.

[Patrick notes: How much of a non-starter is “reconfigure business processes?” Well, ask a bank “In how many ways, specifically, can you write an account balance?” and they will be unable to tell you. That is like asking the U.S.A. to count all the regulations. It could be done, theoretically, but the prospect of actually sitting down and doing it would take you years and you’d never find them all. And so clearly it is ludicrous to ask them to change all of them.]

Will continues: And the people who took this view were bolstered by an academic result that came out of MIT called the CAP theorem, which seemingly proved a conjecture by a really smart guy named Eric Brewer, who basically said that a distributed database—a database running on multiple computers—you can kind of have it be consistent, meaning everybody gets the same picture when they query it, or you can have it be available, meaning somebody can always query it, but you can't have both those properties at the same time because, you know, computers might get disconnected from each other and so on.

And basically what happened was people radically over-interpreted this result and decided that it meant all kinds of things that it didn't actually mean, and decided that it was an actual impossibility theorem, meaning that you couldn't build a useful distributed database that offered you a kind of single-machine-centric view of the world. And so they all gave up.

Patrick: Yeah, I will cop to having used the CAP theorem as a shibboleth a few times in my life, and it's interesting what engineering truisms get used as shibboleths and then repeated until they prove something that the actual paper didn't prove.

There's another famous paper in computer science. It invokes a diagonalization proof that essentially proves that a computer program can't always answer any meaningful question in the world. [Patrick notes: If a program can definitely answer meaningful questions that program definitely halts, and programs cannot be proven to halt, and therefore no program can definitely answer meaningful questions. If you want to read up more on this, the search term is the Halting Problem.]

Thankfully, nobody believes that gloss of that result, because they observationally do answer meaningful questions. Our entire society is based around this fact! And so the fact that they're theoretically incapable of doing that just passes by the wayside and no one says, oh, AI is impossible, and web browsers are impossible, because computers can't answer meaningful questions.

Will: No, totally. There exist impossibility results in AI as well. Which I mean maybe we'll get to that later. It turns out they don't mean what people think they mean.

Patrick: Hopping back a few decades for people who didn't cut their teeth on SQL, SQL is a fantastic technology. We've had it since the mid 1970s. Fundamentally, it was built for a world where an enterprise's amount of data could be bounded by “things that are achievable by humans typing into machines.”

And the reason the largest enterprises in the world have much, much, much, much, much more data than we had in 1970 is because we are recording data that is generated by computers about interactions—interactions of users and computer systems, interactions of computers and computers. It is no longer rate limited on typing speed and number of humans in the world. [Patrick notes: It also can come from sensors, most notably cameras. Every time you snap a picture you add more data to the world than you will personally type in months.]

And so the amount of data that a Google or an Amazon needs to store is greater than the entire population of people capable of typing in 1970 could produce, given all of their time devoted strictly to typing into the computer.

Will: That's right. And the rate of updates is potentially much higher as well, because the updates are being generated by other computer systems which can write data very, very fast.

[Patrick notes: How often was an account balance at a bank updated in the 1970s? A few times per month, typically. These days banks probably write server logs to databases (&tc) which record every time a robot views any of their web pages, which is potentially thousands of times per second. For one not-very-core use of the data systems.]

Will continues: Yeah. So the CAP theorem actually is a pretty interesting example of the production function of academic knowledge or something. You know, when Brewer conjectured the theorem, the CAP conjecture that he came up with, it was a very, very interesting question, very interesting hypothesis, which I think my hot take is that it's been proven false now.

And then when this conjecture got formalized into a quote-unquote theorem, what happened was that the academics involved in that effort, who are very smart people who I have a lot of respect for, they defined the terms in an extremely narrow, extremely specific way, which made it a possible thing to prove. But the theorem they proved was semantically actually quite different from Brewer's original conjecture, even though it used all the same words.

And so there arose this terrible ambiguity of what has actually been proven. And, you know, I would argue that the existence of systems like FoundationDB or like Spanner or others is sort of proof that's not what they thought they proved.

So anyway, there was a vogue back then that was basically like, we're going to make you, the user, do all the hard parts of having a database. FoundationDB was founded on the principle that, no, we can have our cake and eat it too, right? We can make a database that scales across many, many machines very gracefully, works the way you expect intuitively a database to work, handles all of the conflicts, handles all of the "well, what if this machine is down at the moment you ask a question, the question goes to another machine instead, and the other one comes back up."

Right. All of that stuff handled for you under the surface. Nobody needs to notice.

Patrick: And one of the reasons this is important, particularly at the scale of the largest enterprises, is that at the scale of large enterprises you have to be aware that people make mistakes all the time. This includes people that have engineering degrees and are employed as, say, you know, junior programmer in the office in Brisbane. [Patrick notes: Or staff engineers in San Francisco.]

And you can't have the property of your system where, yes, data in our database, quote-unquote, is reliable as long as every junior engineer in Brisbane understands exactly the properties of the distributed database system that we are providing them with.

Abstraction layers and hardware reality

Will: Yeah. That's right. And so long as all of the network switches between these computers behave correctly, and so long as the sysadmin in the data center doesn't unplug a cable at the wrong moment and dot, dot, dot, right, there's weird stuff that can happen in the physical environment that computing happens in as well. And the mark of a good computer system is it should hide that from you. Right? You should not have to care whether the electrician at the data center is doing his job correctly.

[Patrick notes: I am glad I am not the only person who verbalizes the ellipsis (dot dot dot).]

Patrick: And a bit of ancient lore for computer programmers at this point. The genesis of the word “bug” in computer programming was that, I think it was an admiral in the US Navy observed that root cause analysis for a failure of a program running on a mainframe was, there was physically an insect that got into a particular mainframe and caused an electrical short. [Patrick notes: The story is somewhat more complicated than my verbal gloss on it, but at this point it is folklore more than history.]

Similar things, you know, backhoes severing connection lines, literally cosmic rays corrupting databases, happen all of the time at scale.

And so we have to be robust against the world of atoms, even when we're theorizing about the world of bits.

Will: That's exactly right. And it's actually even stronger than that. The more distributed your application, the more you have to care about the world of atoms. Because the more the world of atoms cares about you. If you are running a program on one computer, it is maybe vanishingly unlikely that a cosmic ray will hit that computer at the wrong moment.

If you're running your program on a million computers and they're all working together, the odds of a cosmic ray hitting one of those computers is now a million times greater. And there's other kinds of weird things that happen in the world which actually are more likely to produce correlated failures. If you get a bad hard drive in one of those computers, there's a pretty good chance there's a second bad hard drive out there, because bad hard drives come in batches.
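[Illustrative sketch: the "a million times greater" arithmetic holds approximately when failures are independent and individually rare. The per-machine probability below is a made-up number, and independence is exactly the assumption that the bad-batch example breaks.]

```python
import math


def prob_at_least_one(p: float, n: int) -> float:
    """Chance that at least one of n independent machines is hit, given per-machine chance p."""
    # 1 - (1 - p)^n, computed with log1p/expm1 so tiny p values do not round away.
    return -math.expm1(n * math.log1p(-p))


p = 1e-9  # hypothetical chance of a harmful bit flip on one machine in some time window
for n in (1, 1_000, 1_000_000):
    print(f"{n:>9} machines: {prob_at_least_one(p, n):.3e}")
```

For small p the result is close to n times p, which is the linear scaling described above. Correlated failures do not follow this formula at all, which is why the batch effect matters.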

And that supplier who sold those hard drives to the data center probably sold them a whole batch which had a higher than average failure rate. Right. And so all of a sudden, all these questions of—it's actually very similar to the kinds of things that risk managers at hedge funds have to worry about, like correlated market moves and stuff like that.

Patrick: Or even correlation between employee behaviors that yadda yadda. The hard drive failure at scale is an interesting problem. People, consumers, let's say I think of the hard drive either—these days they're fairly reliable that you are unlikely to observe a hard drive failure in your lifetime with a particular computer, which I think was not the case when some of us who might be a little bit older were using computers in the 90s or 2000s.

The way engineers reason about hard drive failures used to be, back in the day, that they had a rating from the manufacturer. The mean time between failure—MTBF—was calculated in the QA department lab, which ran them with sample workloads. The manufacturer would rate this particular model as—or even this particular model sold into this particular channel as having, let's say, I don't know, 8000 hours of continuous use on average between failures, which means some of them might fail after 1200 hours, some of them might fail immediately on arrival.

[Patrick notes: The world of atoms is complicated. We often have the perception that parts with the same name are equivalent. They extremely are not. And you could, hypothetically, have a line to produce a part, and you might test every part coming off the line (or a sample periodically) to determine how up-to-spec they were. And if you got something which was a little out of spec, but not flamingly out of spec, why throw it away? Just sell it to someone less discerning about exactly keeping to specifications.

Which is one reason retail hard drives and commercial hard drives, even when they look identical, are not the same animal.]

Patrick continues: But that will be balanced out. And so you, the person who is buying 10,000 hard drives, you just have to math for less. And then the thing that the hyperscalers found out when they started employing 10,000 hard drives at a time is, oh, that average summary statistic is a dangerous one, because sometimes it is the case that the lab didn't do anything unethical. The number is the number. However, you know, this particular batch produced on Tuesday at this particular plant will all fail at 500 hours on the dot.

[Patrick notes: Failures are generally randomly distributed except when they aren’t. For example, a programming bug on the Patriot missile made them get increasingly inaccurate the longer they were kept on, and once they were in the field it was extremely difficult to fix, and so the remediation was “Periodically reboot your missile.”]

If we had a deployment topology where a particular American insurance company was buying 500 hard drives for us, that all happened to be the Tuesday batch from that particular plant, they'd be a little pissed off. And so we have to be robust against, you know, having potentially a shipping container full of hard drives all going out within a minute of each other.

Will: Yeah. That's completely right. And there's actually other ways that the math is deceiving too. So one that I recently learned about is a physics reason.

[Patrick notes: Will is going to use a word, hyperscaler, which is a term of art in the industry. It means, essentially, one of the set of Amazon AWS, Microsoft Azure, or Google Cloud Platform, possibly with an honorable mention to Alibaba or Facebook. The hyperscalers host workloads for businesses of all sizes, from my personal website to basically the entirety of the Fortune 500. Each is so much larger than the server fleets of “businesses which are the largest in the world, but aren’t hyperscalers.”]

Will continues: When you're a large hyperscaler buying hard drives, you really want to maximize your hard drive density because you want to reduce the power requirements to store a given amount of data, and you want to have each physical compute node able to access the most data it can. That makes it easier to balance data across places, right? And so what you really care about is drive platter density. But the denser you make the drive platters, the closer the magnetic grains get to each other, the more likely it is that the magnetic read/write head when it comes to read or write a piece of data actually flips an adjacent magnetic grain as well.

And so basically, the odds of disk corruption increase super-linearly with the density of the data that you're storing, which is this really weird intrusion of the messy world of solid state physics into the beautiful platonic realm of computer science. Right? But this is actually a thing people have to worry about, and it can cause serious problems.

Patrick: Yeah. And one of the fundamental things that computers offer their users, that engineers offer their customers, and that the companies providing these infrastructure services supply to their customers is the world is a messy place. Physics doesn't care what you want.

But we're going to wrap that in an abstraction layer. And to the maximum extent possible, you don't have to care about the things that are below you in the abstraction layer. And then the joy and terror of engineering is that those words, “to the maximum extent possible”, have 15 asterisks on them. But it's a true fact about the world that the typical junior programmer working at American Insurance Company who is writing something to this cloud, and without loss of generality, Amazon, doesn't care that much about power distribution at Amazon data centers or how dense their disks are packed together.

Will: That's right. And I think this is a big part of why FoundationDB became so popular and why ultimately Apple bought it, and why ultimately they open sourced it and now everybody else is using it too. It just does actually do a very good job of hiding a broad class of such considerations from you. You know, we had a demo back in the day where we literally rigged up a little mini baby cluster of five computers and put them, you know, we'd bring this out at conferences and then we would just have power switches in front of each one, and the network cables right there.

And we'd invite people to come in and just turn things off, or unplug cables and plug them back in and plug them back in at different places. And it was a really compelling demo, because no matter what you did, it just kept chugging along and didn't make mistakes. And that was so far outside of anybody's experience back then that, you know, they were like, oh my gosh, this is going to save me a lot of trouble.

Patrick: Finding and detecting trouble is an unsolved problem in computer science slash unsolved problem in management science as well. But we continue getting better at it over the last couple of decades. I'd love to talk about what Antithesis does, but I think it might be useful context setting for people to talk a little bit about fuzzing first. Do you want to go in that direction?

The problem with traditional testing

Will: Yeah, I can do that. So let's first take a step back and talk about how most programmers think about software testing, QA, and finding trouble before it hits you in real life. I'm going to describe it to you in as fair terms as I possibly can. And you're still going to think it sounds crazy.

And that's because it is crazy. So software is a highly complex system, right? It has emergent complexity. Software artifacts, even pretty basic ones, are among the most complicated things people have ever produced. Right? And so whenever you've got something really, really complicated and it needs to interact with the real world, right? And with physics and all these ways that we've just described, what happens is behaviors emerge, which nobody designed and which nobody expected. You know, bridges have resonant modes that can cause the Tacoma Narrows Bridge to fall down.

And, you know, all this weird stuff happens that you didn't realize that, oh, when this particular O-ring gasket goes below a certain temperature, it's going to get brittle and then the space shuttle is going to explode, right? So much of the really bad stuff that happens in the real world, both in software and hardware, comes from, I think, what finance people would call Knightian uncertainty.

It's model error. It's not like I was wrong about the tolerances of this part. It's there was this interaction between these two parts that nobody ever thought of or nobody ever saw, or this interaction between this one part and the world that nobody ever thought of and nobody ever saw.

Patrick: And if I can interject for a moment here, the uncertainty starts coming in very, very early. Even if you construct toy models of programs, you are already past the point where you can reason over the entirety of what those programs could do.

So finger to the wind for people, imagine Twitter as a program just sitting in a box. And if we still have 140 characters, and you just want to have a programmer go through and exhaustively test that none of the possible tweets break the Twitter box, that's already strictly impossible, because the number of states Twitter can be in is greater than the number of atoms in the observable universe.
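[Illustrative sketch: a back-of-the-envelope version of this count. It assumes only the 95 printable ASCII characters and counts tweets of exactly 140 characters, so it understates the real input space. The figure of roughly 10^80 atoms in the observable universe is the commonly cited order-of-magnitude estimate.]

```python
ALPHABET_SIZE = 95      # assumption: printable ASCII only
TWEET_LENGTH = 140      # count only tweets of exactly this length
ATOMS_EXPONENT = 80     # rough estimate: about 10^80 atoms in the observable universe

# Python integers are arbitrary precision, so this is an exact count.
possible_tweets = ALPHABET_SIZE ** TWEET_LENGTH

# The number of decimal digits gives the order of magnitude.
tweet_exponent = len(str(possible_tweets)) - 1

print(f"distinct tweets: about 10^{tweet_exponent}")
print(f"atoms in the universe: about 10^{ATOMS_EXPONENT}")
print(f"ratio: about 10^{tweet_exponent - ATOMS_EXPONENT}")
```

Even this deliberately low count of inputs alone dwarfs the atom estimate, before considering any internal state of the program that receives them.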

Will: My favorite example of this is actually Super Mario Brothers, which is the original Super Mario Brothers from 1985. That was a very, very simple game. But as you say, the number of states of that game is far greater than the number of atoms in the universe, and it is actually quite easy to find bugs in even that very, very simple game. You can clip through walls, you can do all kinds of crazy stuff, because there were scenarios that the makers of that game never envisioned, and they could never possibly envision them because there's just so many.

Patrick: And I think with the disclaimer when you say it is easy: it was not easy as of the development of Super Mario Brothers to find these things.

The state of the art in testing software back in the days was you hired a bunch of people to “get paid to play video games.” [Patrick notes: This is, at every video game company ever, the most disingenuous pitch for employment of all time, because software testing does not feel like play. At all. It is miserable, repetitive, assembly-line style work, and a career dead end in the bargain for most people hired to do it.]

Patrick continues: You sat them in front of a development machine with the disk inserted. And then they perturbed buttons on their controller really, really fast and then wrote down paper log sheets and maybe those paper log sheets got passed into Excel by somebody later in the day. We got somewhat better at software testing over the years.

I shouldn't undersell decades of intellectual accomplishment. We got a lot better at software testing over the years.

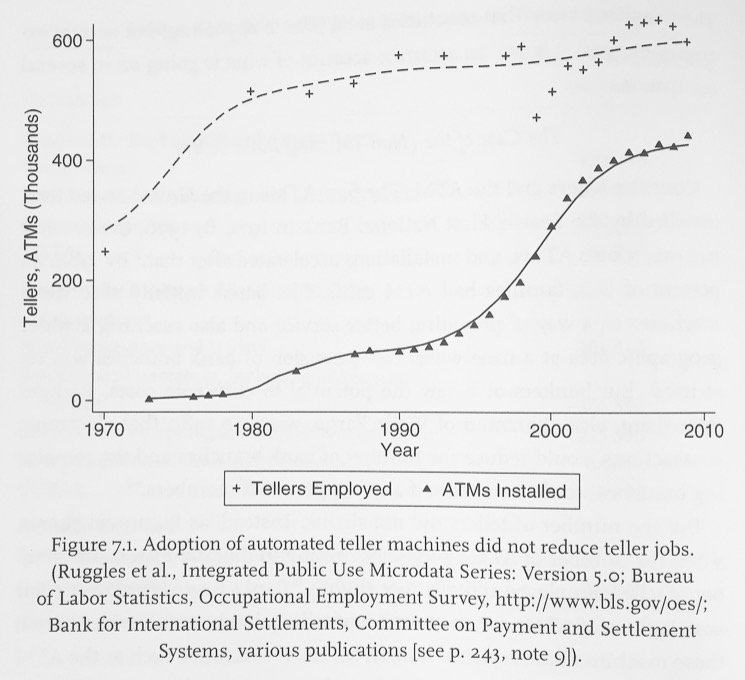

But it still remains hard. And so some of the things that we have developed in the intervening decades were automated testing, where an engineer evaluates or enumerates and suggests, okay, I understand where this program is likely to fail, I'm going to ask a computer to use those failure states, potential failure states, and report to me whether the program works or not.

And then there will be no more bugs in software ever again. And this will be wonderful. Why didn't that work?

Will: Yeah, right. What could possibly go wrong with this idea?

I have this emergently complex system with all kinds of behaviors that no human being could ever foresee, and so the way I'm going to guarantee it works correctly is by sitting down and thinking really hard and thinking of all the problems that could happen.

And then I'm going to write a test to cover each one, right? It sounds great. It doesn't sound like it's going to work. It's like saying, I'm going to test a plane, not by flying it, right, but by sitting down and looking at the blueprints and being like, oh, okay. I should make sure that if I wiggle this, that thing happens, right?

And if I just do that enough times, I will eventually have a safe airplane. But of course, as soon as I put it in those terms, it's obvious. That will never, ever, ever give you a safe airplane. At some point you need to actually put it together and fly it and ideally fly it in many different weather conditions.

And probably there's going to be some pretty scary things that happen. And then maybe if you do that enough times and get enough experience, you will have a safe airplane. But that concept came very, very late to software. Even though software has many of the same problems.

Patrick: And we have management science, which is predicated on this incorrect conception of software being not merely correct, but this is the way we should organize our industries and lives around this lie. For example, so-called “waterfall” development: as part of the software development process, first we're going to write a requirements document. It's going to exhaustively specify everything the software will do.

And then as part of the testing process, before we write the software, because we don't want those engineers in the room for this one, we are going to read the requirements document and then write all the ways that the software can fail. And then we are going to put them all in an Excel file. And when we get the prototype of the software, we're going to go through our Excel file and go through manually 12,000 different ways the software could fail.

And when we observe zero bugs at the end of that, then the software has no bugs.

Will: Right.

Patrick: And this sounds like a parody. But if you work in government contracting, not merely will you be asked to do waterfall software development in 2025, it's literally the law in some places. In those places, the only way the government can buy software is by embracing this parody level understanding of how software works.

Will: Well, the crazy thing is, it's actually not just the government. Even very sophisticated software development organizations have imbibed a version of this, and it's actually pretty deep in their DNA. It's sort of how everybody thinks about it.

The fuzzing revolution

You mentioned fuzzing, right? So for the listeners who don't know, fuzzing was kind of an amazing intellectual breakthrough that happened a few decades ago, which was mostly amazing not because it's a genius idea. Amazing because it's such a dumb idea.

[Patrick notes: We have a rich history of this sort of thing in computing. A recent example is the bitter lesson in AI scaling, which is that no theory of human cognition or team of PhDs working on algorithms was nearly as effective at achieving AI gains as just writing more zeroes on your check to Nvidia.]

Will continues: And yet it was so powerful. And that really shows just how bad the status quo was, right? The idea of fuzzing is, hey, why don't I just throw completely random garbage into my software program and see what happens? And it turns out that if you throw completely random garbage into a software program, pretty much any software program, it immediately crashes.

And so it's oh, all of your 12,000 list item checklists and your carefully crafted automated tests they're all worth the paper they're printed on, because as soon as I literally threw random garbage in, I found a new situation. I found a new scenario that none of you were thinking ahead of time and covered. And that was it was a striking kind of humiliation for the way that people had developed software.

But, you know, these days it's become absolutely standard practice for anything security critical, for instance, and it's really actually changed how people do stuff.
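[Illustrative sketch: a minimal random fuzzer of the kind described above. The `parse_record` target and its bugs are invented for this example, and the `fuzz` helper is hypothetical rather than any real tool's interface.]

```python
import random


def parse_record(data: bytes) -> bytes:
    """Toy target (hypothetical): first byte is a payload length, last byte a checksum."""
    length = data[0]                      # bug: IndexError on empty input
    payload = data[1:1 + length]
    if len(payload) != length:
        raise ValueError("truncated record")   # deliberate, documented rejection
    _checksum = data[1 + length]          # bug: IndexError when the checksum byte is missing
    return payload


def fuzz(target, runs: int = 10_000, max_len: int = 8, seed: int = 0):
    """Throw random byte strings at target and collect unexpected exceptions."""
    rng = random.Random(seed)             # seeded so a failing run can be replayed
    crashes = []
    for _ in range(runs):
        size = rng.randrange(max_len + 1)
        data = bytes(rng.randrange(256) for _ in range(size))
        try:
            target(data)
        except ValueError:
            pass                          # the target rejected bad input on purpose
        except Exception as exc:          # anything else is a crash nobody planned for
            crashes.append((data, exc))
    return crashes


crashes = fuzz(parse_record)
print(f"{len(crashes)} crashing inputs, for example {crashes[0][0]!r}")
```

The fuzzer knows nothing about the record format. It only distinguishes a deliberate rejection from an exception the author never anticipated, and that is enough to surface both bugs.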

Patrick: I think humiliation is kind of the right word. The software industry has oral folklore in a lot of cases, even more than it has research results in papers and academic conferences and, you know, the equivalent in industry. And there was, for example, oral folklore that, "many eyes makes all bugs shallow." And so an arbitrarily well distributed open source program is extremely unlikely to have security bugs.

It was widely believed that: we will find a few occasionally, as a result of intense effort on the researchers, but, you know, no one could design a program to find bugs at scale in a system like, say, the Linux kernel. Thousands of geeks had been over every line of that.

To give a particular person some credit for an intellectual achievement, I think, Michał Zalewski, if I'm remembering his name correctly, back in 2013, just released this program that would do fuzzing based on an image of a rabbit.

Will: AFL. [Patrick notes: American Fuzzy Lop, a breed of bunny.]

Patrick: You pointed AFL at arbitrarily important code and clicked go. And it would start reporting crash bugs and memory corruption errors. And, you know, all the list of the baddies that we had back in the day. [Patrick notes: Those two are particularly important issues because they are weak evidence that there is a security vulnerability in close proximity to them.]

Will: That's right. And AFL basically made one very simple change to how fuzzers work, which was a tremendous leap forward. AFL just said, hey, look, we found all this stuff by throwing totally random garbage into programs. Let's just make it slightly less random. Let's feed in some random garbage. And this is not a black box to us. It's a computer program. We can see which lines of code run. And so then let's have a genetic algorithm. Let's use evolution. If a new piece of random garbage makes more lines of code run in the program, that has a higher evolutionary fitness, and we're going to make more garbage that looks like that piece of garbage. And he defines the reward function from new lines of code to how fit you are.

And he defines a number of what he calls mutations, which take some random garbage and add some stuff to the end, or chop off some stuff from the end, or change a one to a two or whatever, and you just run that for long enough and it's exponentially more effective than pure garbage. And so that was, yeah, that was sort of the second generation of fuzzers, and it has spawned a huge number of imitators ever since.
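The loop Will describes can be sketched in a few lines. This is a toy illustration, not AFL's actual implementation: the `target` program, its hand-written coverage markers, and the three mutations are all assumptions made up for the example, and real fuzzers instrument compiled code rather than asking the program to report its own branches.

```python
import random

def target(data: bytes, coverage: set) -> None:
    """Toy program under test. It records which branches ran in `coverage`."""
    coverage.add("start")
    if data[:1] == b"F":
        coverage.add("saw F")
        if data[1:2] == b"U":
            coverage.add("saw FU")
            if data[2:3] == b"Z":
                raise RuntimeError("crash: reached the buggy branch")

def mutate(data: bytes, rng: random.Random) -> bytes:
    """Apply one small random edit: append a byte, chop a byte, or change a byte."""
    choice = rng.randrange(3)
    if choice == 0 or not data:
        return data + bytes([rng.randrange(256)])
    if choice == 1:
        return data[:-1]
    i = rng.randrange(len(data))
    return data[:i] + bytes([rng.randrange(256)]) + data[i + 1:]

def fuzz(seed: int, max_iterations: int = 200_000):
    """Return a crashing input, or None if none was found within the budget."""
    rng = random.Random(seed)
    corpus = [b""]   # inputs that earned a place by reaching new code
    seen = set()     # every branch that any input has reached so far
    for _ in range(max_iterations):
        candidate = mutate(rng.choice(corpus), rng)
        coverage = set()
        try:
            target(candidate, coverage)
        except RuntimeError:
            return candidate
        if not coverage <= seen:   # new branch reached: treat as fitter
            seen |= coverage
            corpus.append(candidate)
    return None

crash_input = fuzz(seed=0)
```

Guessing the three magic bytes blindly would take on the order of 16 million tries. Keeping the inputs that reach new branches lets the search solve one byte at a time, which is the "slightly less random" change.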

Patrick: My understanding, and feel free to correct me if I'm wrong, was that there was an intermediate generation of fuzzers. We started with throwing random garbage or throwing this list of random garbage that we know exercises bugs in a lot of programs.

Generation 1.5 was, okay, we're going to train machine learning models, of which genetic algorithms are one type. We will use them to generate a bigger list of garbage. We will then throw the garbage against programs without knowing the internals of those programs.

And so the one big insight is I can know the internals of the programs: I have source code available, and I have them actually executing here and can observe things happening in memory. And so I'm going to use that knowledge, plus these genetic algorithms, which, interesting convergence, were present enough in the literature that they were covered in my undergrad CS course in 2004. I, a much less accomplished programmer, used them to control ants in a simulation in the mid 2000s. [Patrick notes: At least one team got a paper out of a similar idea.]

And then, you know, fast forward ten years and they get implemented into a research result that immediately gets productionized by the largest firms in capitalism, because AFL was a stunning achievement in the state of the art.

Will: Yeah. Most things get invented many times. And I think this is true of fuzzing as well. So another time that it got invented, right around the same time actually, was called property-based testing. I don't know if you've heard this phrase, but the idea behind property-based testing is, you know, rather than have, let's say, an example-based test where I insert one into my database and then I check that my database contains a one, and then now I have a test, right?

Instead I write a property which is a more general specification that says, hey, I can insert numbers into my database. If I insert one, I should then be able to find it afterwards. And now I can run that with any number and I can run it many times. I can run it concurrently. I could add more operations.
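A minimal sketch of the insert-then-find property, using only the standard library. `TinyDatabase` is a hypothetical stand-in for the database in Will's example, and the choice of three operations and a small value range is an assumption made to keep the example short. Libraries built for this style of testing add input shrinking and smarter generators on top of the same idea.

```python
import random

class TinyDatabase:
    """Hypothetical stand-in for the database in the example."""
    def __init__(self):
        self._rows = set()

    def insert(self, value: int) -> None:
        self._rows.add(value)

    def delete(self, value: int) -> None:
        self._rows.discard(value)

    def contains(self, value: int) -> bool:
        return value in self._rows

def check_insert_then_find(seed: int, steps: int = 1_000) -> int:
    """Run random operations and compare the database against a simple model.

    Returns the number of lookups checked; raises AssertionError on a mismatch.
    """
    rng = random.Random(seed)
    db, model = TinyDatabase(), set()
    lookups = 0
    for _ in range(steps):
        value = rng.randrange(50)   # small range so values collide often
        operation = rng.choice(["insert", "delete", "lookup"])
        if operation == "insert":
            db.insert(value)
            model.add(value)
        elif operation == "delete":
            db.delete(value)
            model.discard(value)
        else:
            # The property: the database agrees with the model on every lookup.
            assert db.contains(value) == (value in model), (seed, value)
            lookups += 1
    return lookups

# One property, many generated scenarios.
for seed in range(20):
    check_insert_then_find(seed)
```

The example-based test ("insert one, find one") is a single path through this loop. The property covers every interleaving of inserts, deletes, and lookups the generator happens to produce.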

And this idea is basically constructing a model, or a set of invariants or constraints on what my program must do, and then being able to randomize a set of API actions. And if you think about it, there's the use of randomness, and this idea that we're trying to check some final property at the end. In the fuzzing case, it's "did I crash?" In the property-based testing case, it's "did I do something wrong?" Right. It rhymes.

It just came from a totally different community. Fuzzing came from security researchers mostly. And property based testing came from the Haskell world. And you know, they never talk to each other. And so they just sort of invented all these ideas again.

And they invented many of the same tricks: using coverage as a form of feedback, and evolutionary stuff, and ML. It just sort of happened again, which I think is so interesting and, you know, it's a thing that actually happens quite often, in most disciplines, I think.

Patrick: I think this is every paper about interdisciplinary studies ever written: a failure to communicate between smart people who do different things as their primary day job. And then over and over again in the tech industry, for some value of the word tech, we have roughly simultaneous invention of different concepts. Or, you know, a person in the financial side of the technology world failed to read a CS paper because they don't spend much time with CS papers, and therefore many, many tens of millions of dollars were spent at a large firm developing something which is on undergrad curricula in a different part of the building.

[Patrick notes: A/B testing had a huge heyday in startup circles for about a decade, including with me, approximately 200X to 201X. Reader's Digest was doing split tests with holdout groups back in the 1960s, and there were less sophisticated versions in production decades before that.]

It is fun when we get the transplanted ideas across industry and then are immediately able to operationalize them or weaponize them, to use the term sometimes used in the industry, to great effect in actually making everything safer or make it more robust, etc.

Deterministic simulation testing

Will: Right. So now, joining together the two stories that we've been telling here, fuzzing got reinvented a third time, and it was at FoundationDB. And the key insight that we had at FoundationDB, or one key insight, was that people are basically trying to test for all kinds of reliability properties or fault tolerance properties in the old, sort of example-based way.

For example, I want my database to keep working if this network connection drops halfway through a transaction. That sounds very reasonable. Right. And so the thing that 99% of developers do is they write a special test that tries inserting some value, and then 45 milliseconds into the transaction drops the connection, and then they check and make sure that everything worked great.

What have you now proved? Well, you've proved that under certain circumstances, if you drop the thing 45 milliseconds in, you know, the thing still works. Sometimes. Maybe.

You have not proven that it works if you drop the connection 44 milliseconds in or 46 milliseconds in, and you've not proven that it works if you were writing a different value at that moment, and you've not proven that it works if somebody was walking by the computer and gave it a shove at that moment, and so on and so forth.

And so actually, it turns out that pretty much every kind of "my computer effectively hides physics in the real world from me, beneath a layer of abstraction" type properties that you might want is a property that can really only be checked via this more randomized, constraint based testing approach. Right? What you want to say is, if I'm writing a value, you can drop the connection at any point and it will work.

And then I want the computer to go try and do it at a whole bunch of different points, so that I believe that this is actually true in general, not just for the one that I happened to pick.
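"You can drop the connection at any point and it will work" can be written down as a check that picks the drop point at random instead of hard-coding 45 milliseconds. The sketch below is an assumption-laden toy: `Store`, its three-step write, and the checksum field are invented for illustration, and the drop point is a step number rather than a wall-clock time.

```python
import random

class ConnectionDropped(Exception):
    pass

class Store:
    """Hypothetical store whose write takes three steps."""
    def __init__(self):
        self.record = None

    def write(self, value: int, drop_at: int) -> None:
        staged = {}
        for step in range(3):
            if step == drop_at:
                raise ConnectionDropped(step)
            if step == 0:
                staged["value"] = value
            elif step == 1:
                staged["checksum"] = value % 97
            else:
                self.record = staged   # one assignment publishes both fields

def check_drop_anywhere(seed: int, trials: int = 1_000) -> int:
    """Drop the connection at a random step; the write must be all or nothing.

    Returns how many trials actually experienced a drop.
    """
    rng = random.Random(seed)
    drops = 0
    for _ in range(trials):
        store = Store()
        value = rng.randrange(1_000_000)
        drop_at = rng.randrange(4)   # 0 to 2 drop before that step, 3 never drops
        try:
            store.write(value, drop_at)
        except ConnectionDropped:
            drops += 1
        expected = {"value": value, "checksum": value % 97}
        # The property: never a half-written record, whatever the drop point.
        assert store.record in (None, expected), (seed, value, drop_at)
    return drops
```

If `write` were changed to publish the value and the checksum in two separate assignments, some drop point would leave a torn record and the assertion would report the seed, value, and step that exposed it.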

Patrick: And you want to be able to state the requirement, which is in many RFPs and similar: "And also the system should be robust to hardware failure." Which is one sentence to write. Right. But it's not one sentence to test anywhere in the world, because there's an infinite universe of potential hardware failures and scenarios.

Will: That's exactly right. And so basically, at FoundationDB, we developed a new style of testing, which, speaking of parallel discovery, may have actually been invented at AWS at the exact same moment that we were inventing it. We had a podcast recently with Marc Brooker over there about that.

But basically this new style of testing was called deterministic simulation testing. And you can think of it as fuzzing for the world. Right? Instead of fuzzing the inputs to my program, I'm going to fuzz the environment in which my program runs. I'm going to try a whole bunch of different random conditions—not random “What did I do?”, but random “What was happening while I did what I did?”

We're going to try running the transaction with network connections dropping at all these different moments, and different combinations of machines failing, and different combinations of hard drives failing.

And, you know, not just everything you can think of that could happen, but everything you can't think of that could happen. We're just going to create random generators for real world chaos, and we're just going to keep our computers crunching and simulating what would happen to the database in all these different situations until we find a case where it does something wrong.

And then the beauty of the approach: a real challenge, usually, with this style of testing is that correctness can be so heavily timing dependent, right? Sometimes, a computer algorithm or process will work every single time except for 1 in 1,000,000, because in that one in a million, this thread gets slightly ahead of that thread, and you can't exactly know ahead of time what the crucial condition is.

That's especially true in distributed systems. But the thing about deterministic simulation testing is because all of these failures are virtual, and they're happening in this simulation that you've constructed of your software, they're also perfectly repeatable and replayable. So rather than discovering one of these weird 1 in 1,000,000,000 failures in production, which might not sound like a big deal, except if you're Facebook, 1 in 1,000,000,000 is happening many times per day, right?

But it will definitely not happen while you've got your microscope out and you're looking for it. So instead of having to deal with that and to work backwards and try and figure out, oh, what were the conditions that caused this to happen? You have it in a time machine. You have it trapped, you know, with your YouTube scrubber and able to go back and forth and look at exactly what went wrong.

And so it just makes it a drastically more productive environment for figuring it out and for making systems really solid. And this was the secret behind how FoundationDB got so good. And it's now a technique that's escaped containment. And it's sort of spreading through the industry.
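The "perfectly repeatable" part comes from drawing every source of chaos from one seeded random generator. The following is a deliberately tiny sketch, not how FoundationDB or Antithesis implement it: the two-message scenario, the 1% reordering rate, and the "last write sent wins" property are all assumptions chosen so the rare failure is easy to see.

```python
import random

def simulate(seed: int):
    """Run one simulated scenario. Every 'random' event is derived from the seed."""
    rng = random.Random(seed)
    trace = []
    replica_value = 0
    # A client sends two writes in order; the simulated network may reorder them.
    messages = [("set", 1), ("set", 2)]
    if rng.random() < 0.01:   # the rare timing event
        messages.reverse()
        trace.append("network reordered the messages")
    for operation, value in messages:
        replica_value = value   # naive replica: applies writes in arrival order
        trace.append(f"applied {operation} {value}")
    ok = replica_value == 2     # property: the last write sent wins
    return ok, trace

def find_failing_seed(limit: int = 100_000):
    """Search seeds until the property fails; return that seed, or None."""
    for seed in range(limit):
        ok, _ = simulate(seed)
        if not ok:
            return seed
    return None

failing_seed = find_failing_seed()
first_run = simulate(failing_seed)
second_run = simulate(failing_seed)   # the replay: same seed, same events
```

Because the failing run is identified by a single integer, it can be replayed as many times as needed, with extra logging or under a debugger, and the rare reordering happens on every replay.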

Patrick: Yeah, I think a few years ago, Patrick Collison, who runs Stripe, which I previously worked at, lamented that the standard way that we do software development is not time traveling debuggers everywhere.

Like every other technology, it had to be invented. And you folks had a particular deployment of what is essentially a time traveling debugger for testing databases. And it helps sort of close the loop between we are able to speculate with high fidelity that the program will have failure in cases that rhyme with this one.

Now you, the software engineer, get that report and you have to do something about it. Sometimes doing something about it is: yeah, that'll never happen. And I think we've all been bitten by that one once or twice over our careers. But sometimes it's: okay, I have to go back and make my code more robust, or my deployment environment more robust, or include countermeasures against this, you know, particular source of corruption, etc., etc.

And essentially the software is making the engineer, and the system the engineer is embedded in, much, much more efficient at going through this loop, versus: the logs say something happened. The state in the database is, after I have spent three hours looking at it, not what I expected it to be. Where across this artifact that is literally more complicated than the space shuttle is the problem? And ideally, I can solve that with myself and a small team of people and a budget of 12 hours.

Will: There's actually another way that it makes you vastly more productive. I think what you said is 100% true, but there's actually another one too, which is the latency between when you introduce the bug and when you find it has a huge effect on how productive you will be debugging it. Right? If you introduce a bug and it is immediately found, you get a red squiggly mark in your editor or whatever.

You can solve that bug in one second by pressing Control-Z. If you introduce a bug and it's not found for six months or 12 months, and it's found in production, then, you know, by that point you may have left the company. Some other guy is now debugging it. He has no idea how your code works. Nobody remembers what was being modified there. You know, nobody remembers the context of the change that introduced the bug. That's going to be orders and orders and orders of magnitude harder to debug.

Patrick: Plus the environment the bug is found in gets increasingly removed from the one it was created in. And so if you find a bug in your own code the day you are writing it, it's not necessarily the case that you have written that bug. It could be, you know, literally a flaw in silicon that was laid down many years ago. It could be a bug someone else at your company created 12 months ago and you were the first person to exercise it. But, in terms of Bayesian evidence, it's probably you. You can use the fact that it's probably me, and it's probably what I'm doing right now, to quickly locate it.

Whereas if 10,000 developers work at your company and they're, you know, all cranking out multiple deploys a day, then there might be hundreds of thousands of code changes to step through 12 months from now to figure out, okay, which code change was the one that introduced the issue.

We have some fun tools for this.

One of the most interesting moments in my life as an engineer was being told about git bisect, which is this fun little thing that you can get source control programs to do. If you write a minimum test case which can exercise a bug, and that's no small accomplishment, magic software can find where exactly in the timeline that bug was introduced and pinpoint it to the minute. It's not primarily used for “Who do we fire now?” but is primarily used for directing remediation efforts.

Patrick continues: But finding the minimum test case is hard.
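The "magic" is a binary search over history. A sketch of the idea, under the assumptions that commits are in chronological order, the oldest is known good, the newest is known bad, and the bug stays present once introduced; the integer "commits" and the `FIRST_BAD` constant are placeholders for a real history and a real test case.

```python
def bisect_first_bad(commits, is_bad):
    """Return (first bad commit, number of tests run).

    Assumes commits[0] is good, commits[-1] is bad, and that once the bug
    appears it stays in every later commit.
    """
    good, bad = 0, len(commits) - 1
    tests_run = 0
    while bad - good > 1:
        middle = (good + bad) // 2
        tests_run += 1
        if is_bad(commits[middle]):
            bad = middle    # bug already present: look earlier
        else:
            good = middle   # bug not yet present: look later
    return commits[bad], tests_run

# Hypothetical history: 100,000 changes, bug introduced at change 73,421.
FIRST_BAD = 73_421
commits = list(range(100_000))
culprit, tests_run = bisect_first_bad(commits, lambda commit: commit >= FIRST_BAD)
```

A hundred thousand changes need at most 17 runs of the test case. In git itself the same search is driven by `git bisect start`, `git bisect good`, `git bisect bad`, and `git bisect run` with a script that exits non-zero when the bug is present, which is why a reliable test case is the expensive part.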

Will: Absolutely. And the way I would put this is that basically really powerful, robust testing, which to your point is able to consistently and quickly find a particular issue, actually makes debugging, in the sense of root cause analysis and detective investigations through log files and data files and, you know, forensic analysis, it actually just makes all of that not happen.

It actually makes it incredibly rare. And that's such a gigantic productivity boost for a team that people who have lived in one world and not the other often, I think, don't actually understand what the other world looks like. You know, there's one world where your job is software engineer, and 90% of your time is being spent trying to figure out what on earth is causing something to explode in production.

And then there's another modality where, effectively, 0% of your time is doing that and you're just going 10x faster. I think these worlds both exist out there in parallel, sometimes even within the same company. And if you've only ever had one, you just have no idea that the other one is occurring.

Patrick: And a factor which I think is less true at the tech majors, although it still happens at the tech majors, but is extremely commonly observed in many other places in the economy which employ lots of developers, like finance, is that often the issue is not, strictly speaking, with you. The part of the broader quote-unquote system that is causing the issue is one that is at a counterparty, or on somebody else's computer, etc., etc., etc.

And there's a saying in the software engineering community: a distributed system means a computer that you don't even know exists can blow up your machine/program/business/life.

But when you don't have full visibility into the full state of the system, and when you don't necessarily know what business process ran prior to me getting this data file that caused, you know, this later API request that I made to have an error in it, then the forensic reconstruction, very literally, sometimes involves forensic accountants. And your job cannot be that satisfying or fast when literally doing software development requires bringing in the forensic accountants to figure out what went wrong, which sometimes you have to do because the thing that went wrong is not simply, oh, you know, a cat photo on Facebook got corrupted and someone had to hit Control F5 to see the cat photo again. It's no, people lost money over this. Or no, the computers run the world we live in now. And the upper bound for bad things happening in the world is not people lost money.

Will: Yeah. No, you're completely right. And this is actually a super, super interesting topic.

Real-world testing strategies

I think that when you are faced with this kind of situation, right, the conservative choice as a software developer is to assume the worst possible behavior on the part of your counterparty. And the problem, and the reason why this often doesn't happen, including within organizations, is basically this. We had a saying back at FoundationDB, which we've also carried over here, which is that all observable behavior of a system eventually becomes part of its interface.

Meaning you know, let's say that I have an API, and my promise to you is the API will always give the right answer. I do not promise that the API will always return an answer, or will always return an answer promptly. But in reality, I'm a good engineer and so I've designed this API and it actually works really well. It does always return the answer promptly. You will naturally grow to assume that that is part of its contract and part of its design behavior, even though it's not, even though it's not something you should count on. And so your code will get less robust as a result because it will start to make the same assumption. And so the solution to this that we came up with was something that we call bug-ification.

And what bug-ification means is basically, if I am writing an API, at least when I'm running in test (maybe also when I'm running in production, if you like to live life on the edge), occasionally probe the outer limits of what I'm allowed to do. I know that I can always return to you within five milliseconds, but I've only promised to you that I'm going to get back to you within a second.

So occasionally, deliberately just delay for 900 milliseconds and then send you a response, so that you will not come to count on my responses taking five milliseconds. And this can be done sort of at every layer in the system. And if it's done well and done thoughtfully, it results in a much, much more resilient system overall.
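A rough sketch of that idea, using Will's numbers: a typical five-millisecond response, a promised one-second limit, and an occasional deliberate delay of 900 milliseconds or more. The function names, the 5% rate, and the two example clients are assumptions for illustration, and latency is simulated as a number rather than actually slept.

```python
import random

PROMISED_LIMIT_MS = 1_000   # what the API contract actually guarantees
TYPICAL_MS = 5              # what the implementation usually delivers

def respond(rng: random.Random, slow_rate: float = 0.05):
    """Return (answer, simulated latency in ms), staying within the contract."""
    if rng.random() < slow_rate:
        # Deliberately probe the edge of what the contract allows.
        latency_ms = rng.randrange(900, PROMISED_LIMIT_MS)
    else:
        latency_ms = TYPICAL_MS
    return "answer", latency_ms

def fragile_client_ok(latency_ms: int) -> bool:
    """A client that quietly came to depend on fast responses."""
    return latency_ms <= 50

def robust_client_ok(latency_ms: int) -> bool:
    """A client that depends only on the documented limit."""
    return latency_ms <= PROMISED_LIMIT_MS

rng = random.Random(1)
latencies = [respond(rng)[1] for _ in range(1_000)]
fragile_failures = sum(not fragile_client_ok(ms) for ms in latencies)
robust_failures = sum(not robust_client_ok(ms) for ms in latencies)
```

The server never breaks its promise, so the robust client never fails. The fragile client fails in test, where the lesson is cheap, instead of on the one day in production when the service is genuinely slow.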

Patrick: Can I give you an example of something that Stripe does which is analogous to this? And I'll preface this example with: I'm not speaking on Stripe's behalf. I no longer work there. And my technical understanding might be somewhat out of date.

People often ask why credit card failures happen in the real world. I have a valid card. It definitely has money available on it. I tried to buy something. The website said, my bank rejected the transaction. Why did this happen? And for many, many years, the financial industry's best answer to this question was, we don't know, gremlins. And the reason that all the king's horses and all the king's men didn't have a better answer to that than gremlins was the financial industry is not one computer operating in one room where we can just inspect the code and find the bug. It's an ecosystem of computers talking to each other. And somewhere inside that ecosystem, something went wrong, but we're not exactly sure why. And so the thing that Stripe does is retries, where, you know, the first thing to do, similar to the case of losing a photo on Facebook, is just hit refresh and see if it works.

So the first thing you do, if you send a credit card transaction to the ecosystem and it comes back no, is to say, all right, let's just try it again. For some very, very small fraction of retries, which is in absolute numbers, because Stripe does more than $1 trillion a year in volume these days, a very large number of retries, say, okay, I'm going to make some semantically neutral edits to the transaction that it just tried to do.

So the transaction is fundamentally this tweet-length thing that you ask, you know, the world to do for you and say, all right, if I was to phrase that very, very slightly differently, it shouldn't change what I'm asking you to do. But maybe it will exercise a different path through a particular bank that this transaction is going through.

And you run that loop many, many millions of times. And eventually you learn quirks about various financial institutions. And so it might take a team of historians and system engineers looking into a particular financial institution to understand why is it the case that you really, really hate when zip codes in the United Kingdom are down cased versus being uppercase, which is how they are traditionally rendered?

No set of humans should ever have to ask that question in the real world. A computer can just learn over time: I had millions of bites of the apple, and this is just one of the apples in the orchard that I found. If I'm working with this particular bank in the middle of Kansas, uppercase all the British zip codes. No one has to ever find it.
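To make the shape of that learning loop concrete, here is a purely hypothetical sketch. It is not Stripe's system: the `picky_bank` function, the three postcode variants, and the approval-rate bookkeeping are all invented for illustration, and a real system would be weighing far more fields, issuers, and later fraud signals.

```python
import random
from collections import defaultdict

def picky_bank(postcode: str) -> bool:
    """Hypothetical issuer that rejects any postcode containing lowercase letters."""
    return postcode == postcode.upper()

# Semantically neutral edits: each variant asks for the same transaction.
VARIANTS = {
    "as_entered": lambda text: text,
    "upper": str.upper,
    "lower": str.lower,
}

def learn_quirks(seed: int, attempts: int = 2_000) -> dict:
    """Try a random variant on each attempt and record the approval rate per variant."""
    rng = random.Random(seed)
    stats = defaultdict(lambda: [0, 0])   # variant name -> [approved, tried]
    for _ in range(attempts):
        postcode = rng.choice(["sw1a 1aa", "SW1A 1AA", "Ec1a 1bb"])
        name = rng.choice(list(VARIANTS))
        approved = picky_bank(VARIANTS[name](postcode))
        stats[name][0] += approved
        stats[name][1] += 1
    return {name: approved / tried for name, (approved, tried) in stats.items()}

approval_rates = learn_quirks(seed=0)
best_variant = max(approval_rates, key=approval_rates.get)
```

Nobody had to read the bank's code or guess at the rule. The approval rates alone say that, for this issuer, uppercasing is the variant to send.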

Will: Fuzzing, but you're fuzzing your way to victory and to a transaction completing successfully rather than trying to cause a crash.

Patrick: Right. And that is exactly the end point: which good transactions get approved versus declined, where we have retrospective knowledge that the transaction is good, because when the transactions are bad, when it should have been blocked, when it actually was fraud, you hear from somebody later, nope, that was fraud. So you, you know, run this experiment, not merely running it millions of times, but running it over time, and then get to iterate towards the, you know, full shape of the complexity of the financial universe. Anyhow, that's my fun fuzzing in the real world story.

Will: That's super interesting.

Patrick: So, Antithesis. We have this notion of a time-traveling debugger, which had to be invented; all good technologies have to be invented. But this is more complex and better than simply having a time-traveling debugger that works on just the code that you wrote on your own machine. Can you give people a little bit of an explanation why?

Introducing Antithesis

Will: Sure. So, when you are trying to test a piece of software that just runs on your machine, it is relatively straightforward in some sense. I mean, we've just spent, you know, an hour talking about how not straightforward it is, but it's actually quite straightforward relative to the other possibility, which is that it involves other people's computers or even just other computers that are also under your control, because when there's a whole bunch of different computers, suddenly it becomes, well, number one, it becomes impossible to say to this entire system, please make the exact same set of events happen again.

Because computers are very complicated things. They're real world hardware. Literally what temperature they are and what the humidity is in the data center can result in operations executing in a slightly different order. You know, a packet going through a network can take different amounts of time at the different times you do it. And if some bug, some really catastrophic bug, requires some really quite specific set of circumstances to manifest, you may never be able to get that to happen on command.

And so time travel debuggers, which have existed, you know, for 20 years for a single process running on a single computer, were long believed to be completely impossible for multiple computers.

So Antithesis basically completely fixes that problem and gives you a fully reproducible, fully deterministic environment that can encapsulate many computers and many processes, and real world, big, complicated, you know, crufty software written over decades, that's spanning some crazy organization.

And it lets you take that whole thing and boil it down and run it in a totally reproducible way. And this can be used for highly efficient debugging, and it can also be used for finding the bugs in the first place.

Because, you know, the other bad thing about having many computers that are doing things a little differently every time is it's basically impossible to fuzz them, right? It's basically impossible to do any of this stuff well, because, as you yourself said, right, you've got some machine learning model that's trying to learn, oh, when I give it this input, I get this result and I've made something happen.

But if things can happen differently on different trials for completely random reasons outside of your control, with nothing to do with what you did, how can your model possibly learn anything? Right? And so by taking all of the complexity and noise and chaos of the real world and turning it into a fully controllable, fully observable simulation of the real world, we both solve the debugging problem and we solve the find-all-my-bugs-really-fast-please problem.

And generally our customers approach it in the opposite order, right? First we find their bugs really fast and then we give them a super powered time machine for debugging them. And so, you know, basically what we're trying to do is bring some of these new software testing paradigms, which are, let's say, a little bit more empirical, a little bit more take the airplane out there and fly it and see what happens.

And we're trying to bring them out of production into test, right, earlier in the development lifecycle, so that you're much faster and more efficient when you solve the issues. And we're trying to bring them within reach of, you know, every organization that builds or consumes software, not just the very most advanced, sophisticated people who live and breathe the stuff.

Patrick: And so just voicing over that for a minute. Production versus test are two different environments that software might run in. And a practice of the most sophisticated companies running software at global scale for the last, let's say two decades has been we do quite a bit of ongoing testing, but the actual user hitting software in the real world, and seeing the bit flips caused by cosmic rays and the backhoes hitting internet connections, it's the only thing that exercises the software to the degree that it happens in the real world.

So we're going to tighten the loop between getting those values in the real world and surfacing them to engineers in some sort of facilitated fashion.

Will: That's correct. Although we actually think that even that is too much of a concession to the old bad way of doing things. You know what's better than waiting for a real backhoe to hit a real fiber optic cable? Let's have a simulated backhoe hit a simulated fiber optic cable a million times a second in a simulation running on your computer.

Patrick: Yeah. And so this is, you know, not live fire. There are no consequences in the physical universe for you running this simulation on your cloud fleet or similar, which yeah, it was a concession by FAANG despite a phrase for them.

But, you know, we did not have the technology available to simulate the universe in real life. And so we let real life serve as our training set, including some amount of regret: oh, yeah, things actually broke for real people on the training set.

Which, interestingly, creates a very different feeling with regards to software reliability between firms which are extremely good at global scale, reliable software and many other firms that write software. Once upon a time, Facebook had a phrase, "move fast and break things," which has come to mean a different thing than it meant back in the day. And many people in serious places, say, the financial industry and governments and similar, said, oh, those tech idiots, who are breaking things all the time, for no reason or because they actually wanted to break things. Clearly that will not fly when people's lives are on the line, when money is on the line, etc., etc. But you end up with extremely different paradigms in different places. You know, to what degree are we relying on the physical universe as a sort of source of input? What is the cycle time between errors happening in the real world and that getting to an actual engineer to look at? What is the number of errors that are required to rise to our attention?

Will: Right. The way I think about this is the real world, the physical world, is not optional, right? You are eventually going to deploy your software in the real world, or at least I hope you do. And you're eventually going to have lots and lots of users, at least I hope you do. And so you do always need a way of noticing what is happening there and responding quickly and rolling something back or jumping in and troubleshooting if necessary.

You know, we all need fire departments, right? Fire departments are very important because no matter how good you are about fire safety, sometimes a fire will happen. But wouldn't it be nice if that were a very rare circumstance? And wouldn't it be nice if this were a really exceptional thing? Wouldn't it be nice if we could make fires happen, you know, 100 times less often by using, you know, extremely flame retardant materials in all of our construction?

That's sort of how I view sophisticated good testing with fault injection and simulation and all the rest of it. We are eventually going to get to the real world. It's going to happen, but I would rather it happen in the real world 1% of the time instead of 100% of the time. And I think that that's going to make for a happier and more productive software development organization.

Patrick: Increasingly, it is not optional to incorporate things. Say you're in finance: you have counterparties to deal with, because otherwise you do not get the money. And those counterparties are running computers that are not under your control, but which will intimately influence computers that are under your control. And our set of tools for mocking this magic were developed in software testing, but our set of tools for mocking the behavior of counterparties or computer systems that weren't under our control were extremely limited, bordering on primitive, for most of, let's say, at least ten years. And so one thing that you can do with Antithesis is say, okay, there's a computer, you don't know what it looks like on the inside. You do not control it. The person who runs it does not get their paycheck signed by the same person who signs yours. But you've got to deal with that computer anyway. Okay? Here are some things that computer could do to you. And is your code robust against that?

Will: That's right. And we try to pursue the sort of principle of worst behavior that I outlined before. Right? So for example, many, many, many of our customers use Amazon Web Services. And so we provide in the simulation a fake Amazon Web Services that you can use and that, you know, your software doesn't even know it's not talking to the real AWS, which is great.

But our fake AWS is an evil AWS, right? It does exactly what it says on the tin, and no more. And so if you have come to rely on, you know, writes to S3 always succeeding, you will quickly discover that sometimes S3 will return a 500 error to you, and you better be prepared to handle that and to retry it atomically and, you know, whatever else you need to do.
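A small sketch of what an "evil" fake might look like from the client's side. This is not the Antithesis simulation or the real S3 API: `EvilObjectStore`, its 30% failure rate, and `put_with_retry` are hypothetical names and numbers. The one deliberate twist is that a failed call may or may not have applied the write, which is why the retry has to be safe to repeat.

```python
import random

class ServerError(Exception):
    """Stand-in for an HTTP 500 response."""

class EvilObjectStore:
    """Hypothetical fake object store that fails as often as its contract allows."""
    def __init__(self, rng: random.Random, failure_rate: float = 0.3):
        self.rng = rng
        self.failure_rate = failure_rate
        self.objects = {}

    def put(self, key: str, body: bytes) -> None:
        if self.rng.random() < self.failure_rate:
            if self.rng.random() < 0.5:
                self.objects[key] = body   # the write landed, but the reply is an error
            raise ServerError("500 Internal Server Error")
        self.objects[key] = body

def put_with_retry(store: EvilObjectStore, key: str, body: bytes, attempts: int = 50) -> int:
    """Retry until the store confirms the write; returns the attempts used.

    Safe only because writing the same key and body twice leaves the same state.
    """
    for attempt in range(1, attempts + 1):
        try:
            store.put(key, body)
            return attempt
        except ServerError:
            continue
    raise ServerError(f"gave up after {attempts} attempts")

store = EvilObjectStore(random.Random(7))
attempts_used = [put_with_retry(store, f"key-{i}", b"payload") for i in range(200)]
```

A client that called `store.put` directly and assumed success would fail on roughly a third of its writes here, which is the point: the assumption gets flushed out in test rather than during a real outage.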

And this is really good for flushing out, you know, so many bugs are really conceptual bugs rather than software bugs. They're a failure to imagine a particular circumstance happening in the world. And I think that's what we are very good at turning up quickly is oh, it never even occurred to you that this machine might lose communication with this other machine. But guess what? It can. And we're going to find out what happens when it does.

Patrick: And there are failures that would never happen in the, let's say, predicted career of a particular engineer at a particular company. So there's no muscle memory for this kind of failure, or even, you know, you personally, engineer Bob, are unlikely to experience this failure or observe this failure in your entire 40-plus-year engineering career.

However, that does not mean this failure will never happen. And for the number of failures that are in that weight class, individually, you are unlikely to experience any one of those failures. However, you're likely to experience some failure that is in that class. And so let's test out as much of that class as possible every time, to make sure your program is robust against it.

Will: That's right. And often these things are actually very cheap to be robust against. Often it's, oh, I just need to retry that. Or sometimes the fix is a line of code. But, man, if you didn't have that line of code, you might have your month ruined or worse, right? The thing about finance is the stakes are almost unbounded. When I'm talking to people in the financial services industry, I just have to say Knight Capital.

Right. This is the hedge fund that got literally liquidated, went to zero over a single software bug. And I think in the crypto industry it's even worse. I mean, people are rightly very, very jumpy about this stuff.

The CrowdStrike example

Patrick: Or if I can give another example there: last year, with CrowdStrike, a single bug by a single engineer at a single company, which is not in the financial industry, took down a material portion of all banking services in the United States on a Friday.

Will: Great example. And that's a bug that's not even in your software. Right. And that's actually a service that we do provide to some people. We have some customers who are basically saying, look, my entire company is resting on this. It's that old xkcd comic, right? It's all resting on this one toothpick, which is this open source library.

And I have no idea what it will do and whether it works correctly. And so we end up testing some open source project or some vendor's software on behalf of the person who's consuming that vendor software, which is kind of an interesting dynamic.

Patrick: And that's particularly critical testing. And also I won't say untested in the status quo, but, neither here nor there.

One part of that dynamic is of course, in serious businesses, you have a quote-unquote service level agreement with your vendor that you can get them on the phone when there's an issue. But the time to detect and the time to resolve are often an order of magnitude more than when you, your team, or your organization controls all the moving parts.

And you can find this in writeups of, say, military plane crashes, where, okay, one of the parts of resolving this while there's still a real human being in the air whose life is at risk is that you need to get on a conference call with engineers at the company that built the airframe and figure it out.

Will: It's actually happened very recently for the F-35. I don't know if you saw that story.

Patrick: Yep, that was exactly what I was thinking of.

Will: Right. Well, and the service level agreement can be cold comfort if, you know, you get your money back. Meanwhile you've just booked a $20 million loss because some customers canceled because they're furious that, you know, something went wrong. Usually the SLA doesn't give you credit for that.

Patrick: There's very possibly, you know, a Knight Capital kind of incident where a company vanishes overnight. And good news for creditors to the bankruptcy estate: you will divvy up a $2,000 claim for that day's cloud services, some months later.

Will: That's exactly right.

Patrick: Let's dig into the weeds more here about how it works, because I saw this technology and I'm fascinated by it.

Finding bugs in Mario

One thing I'm fascinated about: I know that, on the one hand, it's kind of a marketing stunt, given the kinds of people who get into software development. On the other hand, it's awesome. Let's talk about it anyhow. You point this, you know, infrastructure that you've created at Mario and find novel bugs in Mario. How does that even work?

Will: Yeah. So it really, I think, just speaks to the sheer power of a little bit of randomization and then a lot of smarts. So the thing that's sort of philosophically interesting about randomness, this is going to sound a little paradoxical, but pure randomness isn't very random. Right. If I just flip a coin every time I need to make a decision, the resulting distribution has a very, very predictable shape.

It's a binomial distribution. And, you know, it trends towards the Gaussian as the number of coin flips approaches infinity. That's one of an infinite class of possible distributions. Right. And it's not even a very interesting one. It's exponentially unlikely to get really, really positive or really, really negative. And so just randomizing what you do doesn't actually let you get very far into software.
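To put rough numbers on "exponentially unlikely", the short Python sketch below computes the exact chance of seeing at least a given number of heads in 100 fair coin flips. The function name `tail_probability` and the choice of 100 flips are assumptions made for illustration; nothing here comes from Antithesis.

```python
from math import comb


def tail_probability(flips, min_heads):
    """Exact probability of at least `min_heads` heads in `flips` fair coin flips."""
    favorable = sum(comb(flips, k) for k in range(min_heads, flips + 1))
    return favorable / 2 ** flips


if __name__ == "__main__":
    # The further from the middle, the faster the probability collapses.
    for heads in (50, 60, 70, 80, 90, 100):
        p = tail_probability(100, heads)
        print(f"P(at least {heads} heads in 100 flips) = {p:.3e}")
```

Running it shows the probability shrinking faster and faster as the target moves away from 50, which is why purely random input rarely reaches the extreme corners of a program's behavior.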

What you need to do to get very far into software is a much trickier, more sneaky exploration of the software, using randomness sometimes and using structure sometimes. And then the thing that can give you a super-exponential boost to your efficiency at doing this all is remembering where you've gotten and retrying from points that are fruitful.

So this is where the time machine comes in. People think that a time machine is mostly useful on the debugging side, but the time machine is also very useful on the side of finding the bugs and getting deeper into a program. Let's use Mario as an example here. So level one of Mario, right? Imagine I'm playing Mario with a fuzzer. I'm playing with AFL. People have actually done the stunt.

You know, I run into that first Goomba and let's say I've got a 1 in 8 chance, with just random button mashing, of getting over that Goomba and getting to the other side of him. That's great. One in eight. Our computer can eat that for breakfast, right? It's going to find out really fast.

But then there's another Goomba and there's a 1 in 8 chance of getting past him. Now I'm 1 in 64 and then there's a pit I have to jump over. That's another one in eight, let's say. And in order to get to the end of Mario, there's hundreds and hundreds and hundreds of such obstacles. And so when I multiply together the low probability of getting past each of these obstacles with randomness alone, the result is infinitesimally small. I'm never going to beat Mario with a fuzzer this way, right? The thing that helps, though, is if I can notice that I had gotten past one particular obstacle and I can remember what I did to get over that obstacle, then I don't have to rewind to before that obstacle. I can just pick up from where I left off, and that lets me chop terms off this very long product.

And it means that I can get deeper into the software really fast. Now, anybody, even someone not into the SNES, could do that with Nintendo, because a Nintendo emulator is already a deterministic simulation of a Nintendo device, right? That's because speedrunners use them and tool-assisted speedruns use them, and they have to be frame perfect and so on.

But we've just talked about all the ways in which the real world is not deterministic. It's not like that. And so that strategy for exponentially increasing the speed at which I find bugs could not possibly work for real world software unless I have a magical simulation, right? A deterministic simulation of the world which I can rewind and get back to any moment I care about.

So what that means now is I can take your software or some big bank software or whatever, and I can put it in there and I can run stuff. I can just try stuff. Not necessarily until I see a bug, but until I see something different. Right? Until I see something that my machine learning model says, oh, that's interesting. We haven't seen that yet. And then I can remember the exact sequence of steps that got me to that moment. And I can run hundreds more trials from that point, having, like, saved my game at that moment, basically. And this gives me an exponential speedup in getting deeper and deeper into their code, provided that I can actually recreate everything perfectly leading up to that moment. And that's sort of the magic of the system we've built that we can do that.
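The "chop terms off this very long product" argument can be checked with a toy model. The Python sketch below assumes every obstacle is cleared with the 1 in 8 chance used in the conversation, and compares restarting from the beginning after every failure with resuming from a saved point. All names (`runs_without_saves`, `tries_with_saves`, and so on) are hypothetical, and the model deliberately ignores how a real system decides which states are interesting enough to save.

```python
import random

PASS_CHANCE = 1 / 8  # the "1 in 8" per-obstacle figure from the conversation


def runs_without_saves(obstacles, rng):
    """Count full restarts until one run clears every obstacle in a row."""
    runs = 0
    while True:
        runs += 1
        # all() stops at the first failed obstacle, which ends that run
        if all(rng.random() < PASS_CHANCE for _ in range(obstacles)):
            return runs


def tries_with_saves(obstacles, rng):
    """Count tries when progress is saved after each cleared obstacle."""
    tries = 0
    cleared = 0
    while cleared < obstacles:
        tries += 1
        if rng.random() < PASS_CHANCE:
            cleared += 1  # "save the game"; later failures resume from here
    return tries


def expected_runs_without_saves(obstacles):
    """Expected restarts: the per-obstacle odds multiply together."""
    return (1 / PASS_CHANCE) ** obstacles


def expected_tries_with_saves(obstacles):
    """Expected tries: the per-obstacle costs only add up."""
    return obstacles / PASS_CHANCE


if __name__ == "__main__":
    rng = random.Random(0)  # seeded so the experiment can be replayed exactly
    n = 4
    print("expected without saves:", expected_runs_without_saves(n))
    print("expected with saves:   ", expected_tries_with_saves(n))
    print("one sampled run without saves:", runs_without_saves(n, rng))
    print("one sampled run with saves:   ", tries_with_saves(n, rng))
```

Under these assumptions the cost without saves grows as 8 to the power of the number of obstacles, while the cost with saves grows as 8 times the number of obstacles. The saved points are only useful if the run up to each one can be recreated exactly, which is the role of the deterministic simulation described above.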

Patrick: One of the things I love about Mario is the proof of work. You mentioned tool assisted speedrunners. This is a community I find fascinating because I think objectively these people are extremely underemployed software engineers. [Patrick notes: For example, and I think you should understand this more as an art project and feat of strength than anything else, someone attacked Pokemon in such a way that you could mine Bitcoin with a GameBoy.]

But we spent what appears to be minimally millions of hours of effort, distributed over a group of underemployed software engineers, trying to figure out all the ways that Mario or the N64 Zelda game or whatever works in ways that are not anticipated by the global knowledge base about that system. And they fight like hell for tiny, tiny research results: oh, there is a skip in level three where, if you do this particular frame-perfect button sequence at this particular moment, you get three milliseconds off the global best-ever speedrun for level three.

And the thing that Mario is a proof of work for is, yes, there are 1,000,000 hours of, well, there's many, many millions of hours put into Mario, but let's say there's 1,000,000 hours that have directly impacted the scientific literature created by these underemployed software engineers.

For Mario, computer knows none of that. Computer just has Mario in front of it. Go.

And it will start discovering things, even though a lot of time has been put in by very smart people who are extremely, extremely passionate about level three. And it finds novel bugs in level three immediately after you turn it on.

Will: And the sort of mic drop moment when we do a Mario-based demo, which we've done once or twice, is then I take a ROM hack, right? I take a fanmade Mario map that nobody's ever seen before, and you feed that to the system and it just goes and it does the same thing again.

Property-based vs conventional testing

And that makes a very important point, which is conventional tests are incredibly brittle and flaky, because anything you change about the system can sort of change incidental things about how the test runs, which can then lead the test to report a false positive or false negative, which is very, very dangerous and very time consuming and consumes a lot of effort and maintenance and so on.

When you have property-based tests, though, when you have these more loosely specified assertions that particular invariants should always be met or whatever, those are much less likely to change, because the specification of software, the interface of software, changes a lot more slowly than the implementation does. And so the really cool thing about a system like Antithesis is if you say to me, hey, Will, my software should never lose data, I can check that property on your current software and on all new versions of your software without doing any new work per new version of your software. And that is kind of a game changer as far as human effort goes.
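A minimal Python sketch of a "never loses data" property follows. Everything in it is hypothetical and invented for illustration: `ToyStore` is a toy key-value store, `LazyStore` stands in for a later version with a bug, and `never_loses_data` is a hand-rolled random check rather than Antithesis or any particular property-testing library. The point it tries to show is that the property is written once and applied unchanged to both versions.

```python
import random


class ToyStore:
    """Hypothetical key-value store: writes are buffered, then flushed to 'disk'."""

    def __init__(self):
        self.disk = {}
        self.buffer = {}

    def put(self, key, value):
        self.buffer[key] = value
        self.flush()  # acknowledge only once the write is durable

    def flush(self):
        self.disk.update(self.buffer)
        self.buffer.clear()

    def crash(self):
        self.buffer.clear()  # anything not yet on disk is gone

    def get(self, key):
        return self.buffer.get(key, self.disk.get(key))


class LazyStore(ToyStore):
    """A 'new version' with a bug: it acknowledges writes before flushing them."""

    def put(self, key, value):
        self.buffer[key] = value


def never_loses_data(make_store, seed, steps=200):
    """Property: every acknowledged write stays readable, whatever the op sequence."""
    rng = random.Random(seed)  # seeded, so a failing sequence can be replayed
    store = make_store()
    acknowledged = {}
    for _ in range(steps):
        if rng.random() < 0.2:
            store.crash()
        else:
            key, value = rng.choice("abcd"), rng.randrange(1000)
            store.put(key, value)
            acknowledged[key] = value
        if any(store.get(k) != v for k, v in acknowledged.items()):
            return False
    return True


if __name__ == "__main__":
    for version in (ToyStore, LazyStore):
        ok = all(never_loses_data(version, seed) for seed in range(20))
        print(version.__name__, "holds the property:", ok)
```

Note that the check never mentions how either store is implemented, only what must stay true from the outside, which is why it does not need rewriting when the implementation changes.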

Patrick: Yeah. Also, I think we're experiencing this in different ways, in different places, and at different rates, but we're on the precipice of the rate of change of software development, the raw number of commits that a particular organization produces, going, well, exponentially might not even be the right word: much, much higher than our experience to date. That's due to computer systems being able to, not for the first time, but for the first time in the way that we think about this, write their own computer code, basically. I've been programming with Claude and Cursor for the last month, and the cool kids got to it about eight months ago. And yet the majority of capitalism has not yet come around to this being definitely the future, but this is definitely the future.

And in that future that we will arrive at very shortly, there are going to be bugs discovered by computer code, which cause remediations written against those bugs, where a human engineer will be aware, in some vague sense, that that remediation is happening, in the same fashion that Congress is aware, in some vague sense, that the banks are working on money laundering today. But Congress doesn't know that Steve at this particular bank is busting this particular person for money laundering, because that would be crazy.

And given that this pace of iteration is going to pick up massively, we as humans supervising the system want to know: hey, if we're making 10,000 times more changes to the software system on any given Tuesday than we were on a Tuesday 12 months ago, am I still as robust as I was 12 months ago?

And technologies like Antithesis are part of the answer for this, you know, huge fleet of currently junior software developers, who maybe aren't junior software developers 12 months from now. How can we know that that huge fleet is not compromising the guarantees that we make about our systems?

The future of AI-assisted development

Will: That's exactly right. And I basically think that really good software verification techniques, whether it's testing systems like Antithesis, or whether it's formal verification systems like Amazon's looking into, or, you know, whatever the answer ends up being, are actually going to be one of the key enabling technologies of the revolution you're describing, because otherwise you get the problem of lemons and everybody gets too scared and paranoid to go all in on this.