AI alignment, with Emmett Shear

This week, we're airing a conversation with Emmett Shear recorded live. Emmett Shear is the co-founder of Twitch, former interim CEO of OpenAI, and now runs Softmax AI alignment. Emmett argues that current AI safety approaches focused on "systems of control" are fundamentally flawed and proposes "organic alignment" instead—where AI systems develop genuine care for their local communities rather than following rigid rules.

[Patrick's enhanced transcript will be uploaded here on Thursday]

Sponsor: Mercury

This episode is brought to you by Mercury, the fintech trusted by 200K+ companies — from first milestones to running complex systems. Mercury offers banking that truly understands startups and scales with them. Start today at Mercury.com

Mercury is a financial technology company, not a bank. Banking services provided by Choice Financial Group, Column N.A., and Evolve Bank & Trust; Members FDIC.

Timestamps for Video

(00:00) Intro

(02:06) Understanding AI alignment

(03:16) Organic alignment vs. tool use

(05:25) The concept of universal constructors

(07:23) AI's rapid progress and impact

(17:58) AI's mundane utility and practical applications

(19:43) Sponsor: Mercury

(20:55) AI's mundane utility and practical applications (Part 2)

(35:13) AI's sensory and action space

(42:31) User intent vs. user request

(42:44) AI safety and bad actors

(43:16) The complexity of alignment

(43:57) Alignment in practice

(45:14) Human nature and alignment

(50:18) Causal emergence and explanatory power

(55:32) The role of reflective alignment

(01:01:23) Engineering challenges in AI alignment

(01:09:32) The future of AI and society

(01:26:41) Wrap

Transcript

Patrick McKenzie: Hey everybody, my name is Patrick McKenzie, better known as patio11 on the internet. I'm here with Emmett Shear who has had an illustrious and wide-ranging career path.

You were previously co-founder of Justin.tv back in the day, before it became Twitch, and sold that to Amazon. You were at OpenAI for probably the happiest incident in the history of capitalism where a new CEO was fired after three days.

And now you're running a new company, Softmax, on AI alignment.

We're here at a conference in Berkeley, which is something of one of the intellectual epicenters of this movement or practice—I don't know exactly what one would call it—with regards to discussing the range of probable outcomes that people, or human society, could see from AI in the next couple years. I thought we would do a conversation at a couple levels of detail, maybe something that can help people who haven't spent 20 years reading LessWrong posts to get up to speed with the argument here. And also not be the New York Times level of "well, it seems like the LLMs hallucinate and so clearly nothing important is coming down the pike."

[Patrick notes: I think I might have been very slightly unfair to the NYT specifically with that line, but I’m using it as a synecdoche for the coordinators of respectability and power.]

Understanding AI alignment

Patrick continues: So let's start with the briefest sketch of the problem. What is alignment? Why do we care?

Emmett Shear: So I actually go back to literally what is alignment? One of the words that gets dropped when people talk about alignment a lot is—it's alignment to something. You can't simply be aligned. That's actually nonsense. It's like being connected. You can be connected to something if you're aligned with something.

When people talk about alignment, what they're often actually saying is we need a system of control. If you were to read between the lines—or it's not even really between the lines, they're pretty explicit about it actually—that by alignment they mean systems of control and steering that make sure that the AI systems that we build act either in accordance with some kind of written set of rules or guidelines, or do what the user wants. Which are both ways of tool use. When you have a tool, the way that you align the tool to—well in general—is to the user's intent. That's a good tool, is aligned to the user's intent.

Organic alignment vs tool use

But when I think about alignment, I think I have come to really disagree with that view. I think there's another way of looking at what alignment is that we call organic alignment. Because it's inspired by nature, which is this way in which many things can come together and be aligned to their role in this greater whole.

You've got—you're made out of a bunch of cells. Those cells are all aligned to their role in being you. They're all kind of the same kind of cell, but they're also all different. And they all somehow act as a single, mostly coherent thing. You have ant colonies, you've got forests, you've got—even cells themselves are sort of a bunch of proteins and RNA and they're all kind of aligned to being a cell.

Patrick McKenzie: I think if I could interject for a moment, one of the issues with the alignment discourse generally is that there are places where that intuition breaks down. Cancer, for example, where the goal of the cell suddenly becomes rapid propagation of its cancerous genes and maybe not rapid propagation of the organism that is hosting the cancer.

[Patrick notes: As we acknowledge explicitly later in the discussion, we talk about goals in an anthropomorphic way frequently, but it’s a convenient and human gloss on “the purpose of a system is what it does.” A tree doesn’t have to be intelligent, conscious, sapient, or agentic for the system-that-is-a-tree-genome to have the goal of projecting-the-system-that-is-a-tree-genome into the future.]

Patrick continues: The discourse, researchers, et cetera, are in some cases trying to explain—yep, there's this collection of scenarios of things that could go right or things that could go wrong. And we're producing both some larger intellectual body to understand these, but also countermeasures against specific scenarios. And then we are attempting to dump all the bandwidth of 20 years of conversation down a very narrow pipe to the typical reader of the New York Times.

[Patrick notes: I consider myself adjacent to but not a core member of this discourse. I’m a technologist with an increasingly-out-of-date engineering degree with an AI concentration. People on Twitter sometimes joke that the tech industry is interested in AI because it wants to sell B2B SaaS and I really am interested in what AI could do for B2B SaaS. And many other things, too, but I have a comparative advantage in the B2B SaaS part of the discussion.]

Patrick continues: Where am I going with that? I think it's important background knowledge, which is extremely well understood in some communities of practice and not well understood in Washington or New York, metaphorically speaking. The reason we care about this is that this technology is likely to be much more impactful on human life than almost all technologies that have ever been developed.

[Patrick notes: As I say later, I think AI is likely only on par with the Internet in terms of impact, but I think the Internet is more important than medicine clearly and probably writing, except insofar that you have to give writing some credit for eventually allowing us to make an Internet.]

The concept of universal constructor

Emmett Shear: There's this particular idea that I think people in the tech community, even if they've never heard this idea, they have it in the water supply and you've understood it in your heart, which is the idea of a Von Neumann universal constructor. A universal constructor is just any machine that's capable of building itself from a description of itself.

[Patrick notes: How in the water is this? As a single anecdote, I would have understood this at age 12 because I played CoreWars, a programming game. The simplest program in that game is one line of code long: “copy myself to the next area in memory.” All gameplay in CoreWars has to work around the fact of that program being available, because any program not aware of that program will become that program when it copies itself into your memory. The CoreWars universe is a harsh one and I’m glad we don’t live inside it, because it is extremely unlikely, given the physics of that universe, that anything resembling us could survive.]

Emmett continues: That doesn't sound like such a big deal on the surface maybe, but that's what all biology is. You have DNA, which is a description of the biology, and then you have the chemicals that are the machine that can use the DNA to build the thing again. And if you have one of those things, it goes from being a single cell to taking over the entire trophic chain on earth. Fairly fast actually. Because if you're a universal constructor, you're in this learning loop where every time you make a new copy of yourself, it can be different and then that different thing can be a better, more efficient universal constructor and so on.

This happened a second time mostly with language. Where humans basically learn to use words to model other words where we can talk about—you have behaviors that can, speaking as a behavior. And you have a behavior that can now reference other behaviors. You can make a description of a behavior and then give it to someone, and it becomes effectively a universal constructor. You can use language to make more language. And when that happens, humans take over the planet. And if you're not a human, you better hope that you're inside the thing that humans like, not outside the thing.

We've just—computers, we have done that again. AI is the current manifestation of this, but this has been true from the minute you have computers because now you have language source code modeling source. A self-hosting compiler is a universal constructor. And the current set of universal constructors are much more powerful than the previous ones. They're not really quite universal constructors yet.

AI's rapid progress and impact

We haven't quite gotten there. But I think—and I guess this is a matter of faith that people could disagree with—but I think it is obvious that within some period of time in our lifetime, probably not that long into our lifetime, AI models will be capable of making more AI models. And they'll be a universal constructor over machine learning models. And that's going to be wildly powerful.

In the same way that biology or language—you know this third layer of universal constructor, this only happened twice before and it did the same thing. You can kind of guess this is gonna be a big deal. And so that's the underlying concept as to why this looks big now, but we're at a critical tipping point where the thing is capable of reconstructing itself. That becomes very, very big.

Patrick McKenzie: I think I could quibble on there being only two examples of universal constructors in human history. And I think the quibble actually makes the argument stronger. Look at the corporate form or the broader developments in society and the technological substrate that society exists on in the Industrial Revolution. That memeplex is its own universal constructor. It ends up tiling the planet, to use a phrase that folks in this neighborhood like.

[Patrick notes: “Tiling the planet” means “One of more core goals is reproducing myself and, while I’m not hostile per se to you, I will outcompete you for scarce resources, not because I want you to lose but because scarce resources could be used to reproduce me.” And therefore that organism/memeplex/etc ends up causing the entire planet to look like it.

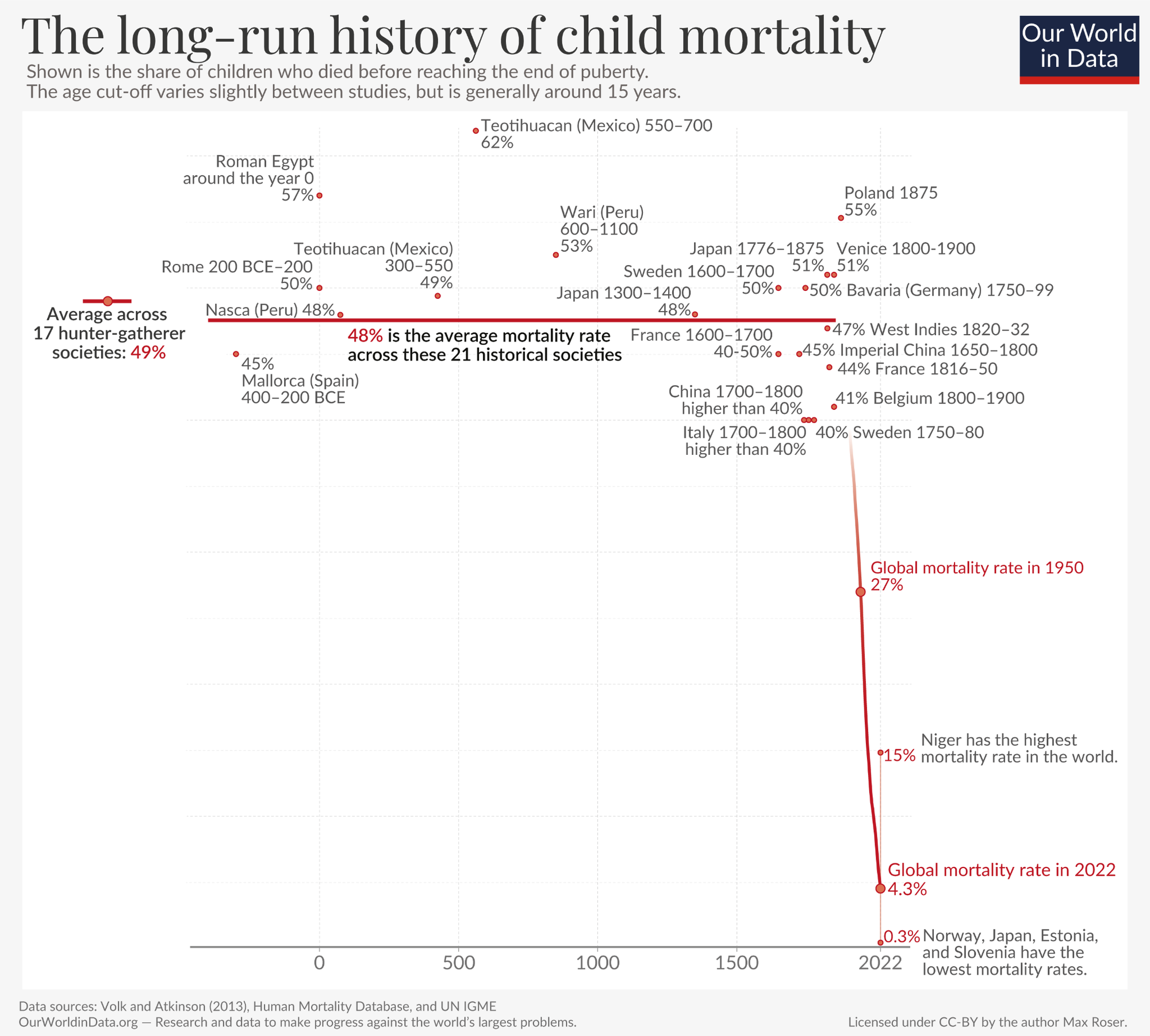

I would claim that, with very limited exceptions in the most remote places or without a border maintained by constantly killing the people carrying it, that memeplex has tiled Earth. Unlike many academics that would make this same point with different jargon, I think that the consequences of that have been broadly positive for humanity. The following graph is, I think, basically dispositive on this question.

But I can understand that there are traditional ways of living that are incompatible with capitalism and you and I did not grow up in them because industrial capitalism ate them.

It will now, as one of its many fruits, sell you the ability to cosplay as one of those ways of living. You can choose, under it, to be a Marxist academic or a live-off-the-land Instagram influencer. But you cannot choose to live in a society which is true to the aesthetics of those cosplayers, because capitalism will show up with a trade proposal, and your society will eagerly accept it, as almost every other society to ever come into contact with capitalism has. Not in the sense that it will be a unanimous vote, not in the sense that it will occasion no conflict, simply in the sense that your rising generation will a) survive at 95%+ probability not 50% probability and b) be capitalists, whether you like it or not.]

Patrick McKenzie: There are all sorts of possible ways to organize human society, but some work—without making value judgments—extremely effectively at some subset of tasks.

There are certain subsets of tasks where if you do very well on that and competing former society X does not do very well comparably, you'll out-compete them on the margins, with or without the intent to say "okay, I'm going to run you to extinction" or similar. So the natural flow of things is that future societies are going to look a lot more like the societies that were able to replicate the technology of being that society very quickly in all sorts of circumstances.

Emmett Shear: I want to distinguish between new layers coming into being and innovation within those layers. Because the things that you're talking about to me sound like when cells—you have biology and it learns to be multicellular. That's us learning to make up a corporate form. It's not that multicellularity isn't wildly powerful, it's just that a multicellular thing and a single cellular thing exists. They're both biological things that have biological attributes.

Whereas there's a jump from chemistry—and no amount of chemical innovation, chemical things, never threaten biological things except by accident. They're not even aware the biological things are there really. Because it's a different layer.

It is worth maybe zooming in and noticing that these things always have three parts to them. You have a first autopoietic self-creative loop, self-replicating RNA. And then you have a stable storage medium that that creates because self-replicating things by their nature are too noisy. And so they create a library, some might have a fixed version of themselves. And then at some point, the combination of the self-created loop with its library closes over into a full universal constructor that can actually end-to-end contain a description of itself and rebuild itself.

And then you get the next layer kind of emerges. That's cells: self-replicating RNA, DNA, cells. We have spoken language. And that's a big deal. It's self-replicating RNA, you have the written word, which starts history and you have a sudden explosion. Then you have self-executing language that is capable of closing over the loop on its own.

There's a bunch of other innovation that happens on top of those layers that rhymes with it. It's part of the evolutionary process. It's part of actually the searching that the thing that generates language is biology becoming more and more complicated and multicellular and better at doing stuff until it births this new layer that kind of lives on top.

I think the thing that's crucial to see with AI is—if you think of it like the industrial revolution, you're missing that it's more like the birth of biology or language. And if you had to pick a more recent one, maybe writing. It's a new layer, not just a sustaining innovation or innovation within the layer. And I think that's a bigger deal. It's literally one abstraction level up from regular innovation.

Patrick McKenzie: I think if you take well-informed people in positions of status or authority or whatever you want to call it in society, this is one more thing we're hearing from Silicon Valley, and we've heard mixed track records with regards to X and Y and Z are going to change the world. And skepticism might come from like, "oh, you know, heard great things about crypto."

Emmett interjects: Not from me.

Patrick continues: Mostly me neither. Anyway, that would continue "You said crypto was going to be the fundamentally new way of organizing the financial industry. We gave you guys 15 years. It isn't that. I think, thereforee, that if I give you 15 years on AI, it's going to be half of the tech demo of the Her movie. But nothing that really matters."

And I think people are mispredicting that.

[Patrick notes: It is striking how many people in the corridors of power are comfortably predicting that in 15 years AI will not be able to do things that you can obviously do today on a tool accessible from your iPhone which costs $20 a month or less.

AI will never be able to create art, because there is some ineffable human spirit involved in art. AI will never be able to program a computer. AI will never be able to do real math. AI will never be able to write a moving story. AI will never be able to read the emotion from a person’s face. AI will never be able to identify whether a photo contains a bird. Etc, etc]

Emmett Shear: Yes, pretty badly. Unfortunately, I can't give them any advice on how to stop mispredicting it, because the only way to tell the difference is to actually go pay attention to the material. There's no surface level thing you can look at that tells you that this one's real and that one's fake. You have to exercise your judgment.

The best proxy you could use is like, well, if someone called the last three, maybe they might know what they're talking about. But even then they could have just gotten lucky. These things don't happen that often. You just don't have that long of a track record. So it's actually very hard to tell if you're not working on it.

[Patrick notes: But by construction here, if the argument is correct, if they called the last three, they had to call capitalism, the written/spoken word, and carbon-based biological systems. I mean I can imagine someone who actually did get those calls right but He does not spend much time on the futurism conference circuit.]

Emmett continues: I guess the real thing I would say to people is—crypto, if it went wrong, was gonna dissolve the financial system or something. This one, if it goes wrong, you're booting up the new kind—the thing that is to biology as language was to biology. This is the language. That should ring some kind of alarm. It's just—the thing at risk is bigger, whether it's true or false.

Patrick McKenzie: I think the way I would help people get over this inferential gap is you just start paying more attention to this and realizing what was impossible five years ago is not impossible now. And just tracking that curve upwards with your own experiences or those of people close to you.

GPT-2 was when—2021, 2022 if I'm remembering right. A combination of pandemic time and AI time has made it very difficult to differentiate things. [Patrick notes: February 2019.] I will charitably describe it as being able to vomit out words. And those words would form coherent sentences, but not coherent paragraphs. And it was somewhat amazing for people who had been around computers and et cetera for a while that you could get coherent words on basically any topic at a sentence by sentence level, even if they didn't actually mean anything.

And then I think a lot of people were exposed to those early outputs of the LLM era and have kind of remembered the fact of that. Be like, "oh yeah, alright, so you've got a magic trick, some math and graphics cards. You mix them together. They can vomit out words, but the words don't mean anything." But every six months we get a new glimpse at a frontier where the words have long since—they mean stuff now.

There are famous philosophical debates in AI—is this machine thinking, Searle's Chinese Room thought experiment, yada yada yada. None of those debates are worth the amount of time that I put in my undergraduate years studying them. There is very much a “there” there these days.

And you can test objective metrics on—just put in front of standardized tests—the random number generator effectively that vomited out words in 2021 couldn't do anything on any standardized test worth noting. And then on a very rapid pace, every three to six months or so, we got updates like: the SAT is solved. Not that it does pretty well on the SAT. The magic term in the community is "saturate the benchmark" where it'll get a perfect score or near perfect score. So much so that the amount of time generating new iterations of the SAT is no longer meaningful because we'll just solve them faster than any human can possibly generate valid new versions of the SAT.

The SAT is so far behind in the rear view mirror at this point [Patrick notes: 2023-2024 or thereabouts] where I don't even know where the state of the art is these days because it's somewhat—you have to be a specialist to understand where the state of the art is. It's moving so fast.

But I was on the competition math team in high school, but not the best competition math team. AIs are far beyond where the good, but not the best, math people ever get. Extreme facility with that sort of constrained competition math problem doesn't mean they could be math research scientists… yet.

But if you are trying to fade the likelihood of them being able to do original research in mathematics over the next 10 years, you're welcome at many poker tables. [Patrick notes: This podcast was recorded in July of 2025. The week it was released, in September 2025, a company claimed it had achieved a SOTA result on exactly that. This follows other papers. I am, unfortunately, not personally competent in evaluating these claims and cannot successfully shell out to experts given my current schedule.]

Emmett Shear: Absolutely. And I think it's important to notice where we're making progress and where we're not. Because we're making this incredible rate of progress at the things you're talking about. I think people who don't use it every day don't notice, but Claude just went to Claude 4 and it's noticeably better. I just solve things for me. I don't have to do that anymore. I can just give it to Claude. And then ChatGPT will launch. It's the same thing keeps happening and it's weekly. It's a very—

AI's mundane utility and practical applications

Patrick McKenzie: Can you give us people some concrete examples of mundane utility? You're a CEO at a company. You've had hundreds of people who you can just give things to. What are things that you would just give to the computer now?

Emmett Shear: Job posts. I typed up the gestural description that I would normally use for a job post. I pointed it at our website and internal Wiki. I gave it the task: write me a job post. Here's the thing, which I would have given to someone in HR or something. It did a very good job in five seconds. And it's just—I could have written that. But why? It is instantly available now.

[Patrick notes: An example in a related genre. Back when I was the CEO of VaccinateCA in 2021, a stakeholder told me we needed a values statement. I thought this genre of document is generally not intellectually interesting, that it was a distraction from work that lives were depending on, and that nevertheless it is a ritualized requirement that 501c3s desiring to do business in California have one with the usual pieties ready to go when someone asks for it. So I told someone to do that. GPT 3, in 2023, produced perfectly serviceable output for this task, after initially criticizing me as being cynical for thinking that non-profit values statements generally contain nothing of value.]

Emmett continues: Or another thing that's so—but I think that's the stuff where you can see it compressing an existing task. It's things like, I get curious about a topic. So I dispatch GPT Deep Research [Patrick notes: now called GPT 5 Pro, because apparently a thing LLMs cannot do is competently replace product marketers at industry-leading labs] to do a survey of all the literature related to this idea and these three other close ideas and to find me papers that match these things.

Takes me a couple minutes to write the prompt up. 30 minutes later I have something that would put a McKinsey analyst to shame. It's a beautifully written, well organized, well cited research survey on everything I need to know about this topic, and now I'm informed. And I probably read—I note the papers that seem interesting to me. I dive into those. I read a couple of them, usually by first handing them to the chat to summarize and then deciding if I want to go deeper. And it's reliable. And I wouldn't even—that I wouldn't have, it just wouldn't have been worth the time to close the loop on that with a human. It's a new thing you can do that doesn't even—didn't even exist.

Patrick McKenzie: A common task in my line of work over the years was producing briefs for communications departments. This is the classic early white collar professional career task because you don't typically task the vice president with it.Executive A and reporter B are going to be talking about this topic. Executive A might not have a huge amount of context on the reporter or their organization.

Thus your task, Comms minion: read the last 10 things the reporter did, and distill that into some understanding of what their angle on this conversation is going to be. Prep the obvious questions that are going to come up and our party line for those questions. And then do some scenario planning. If they push in this direction and try to box this in on something we don't want to say, what are the ways to redirect that to the messages that we do want to say?

This is the classic thing where you give it to a 20-something and say you've got three days, go. And they stress out a lot for three days and then send it back up the chain and get some corrections and then gets handed to the principal 30 minutes before the meeting starts. And deep research will grind that out in three minutes. And it isn't 100% great, but having worked with a lot of 20-somethings in my career and been one at one point—you don't get everything right the first time that you write one of these or the hundredth time. And it's a real open question to me whether there will be any 20-somethings who get the experience of writing these a hundred times just because the magic answer box will get better output the first through 50th time in seconds of latency on this topic.

Emmett Shear: And so actually I think this is a great example of the kinds of things that it can be good at and not. Because basically there's two kinds of learning that have sort of fundamental level. One is given a loss function, given a distance that defines how good your answer is—how far are you from really good? If you give me a loss function, I can “converge.”

I can train a network to make that distance go lower and lower. And so it consistently produces things that match this definition of good. And to the degree that we can write down a measurable, quantifiable goodness of things, we are now capable quite rapidly at this point of making models that will score high on that definition of goodness. And that is half of all of learning is converging to the—once you know what good looks like, how do I produce good consistently. And we've gotten spectacularly good at getting AI that are good at that.

There's another kind of learning, which I think of it as the Rick Rubin learning. It's the discernment to know that this definition of the good isn't the one you want.

[Patrick: Me to Opus 4, two minutes ago: “Is this a transcription error or is there something I don’t know. Pastes sentence.” Opus:

Rick Rubin, the legendary music producer, is famous for his ability to strip away industry conventions and received wisdom about what makes "good" music, instead finding the authentic core of what an artist should actually be creating. His whole philosophy is about rejecting the standard rubrics and finding what's truly essential.

So the speaker is using Rubin as an archetype for the meta-skill of knowing when to reject the scoring function everyone else is optimizing for - very relevant to discussions about AI, benchmarks, and Goodhart's Law.]

And so one—people, the immediate thought people have is, "oh, don't worry, we'll just converge something that has a metric of good over goodness metrics. And we'll have a meta definition of good." And this, I mean, it sort of works. You do get a broader definition of what goodness is, but you don't get—you're not out of the—you're still in this. Okay, now you're in a new different box. You're still in a box.

And whatever your definition is, there's this thing that we know about concepts and definitions, which is that they're models, they reflect a way the world could be or can be, and they're very useful, but they're always wrong. There's not such a thing as a correct model. There's only correct so far or correct in this context. And you cannot describe what's often called open-ended learning in terms of converging to a loss function. In fact, it is exactly the thing that causes us to notice we've converged to a multiple loss function and it's scoring 100% and yet I don't believe it. This, it's not doing what I want. This gap between "I want" and "I can write it down."

Patrick McKenzie: And this is something which should not sound like science fiction! It should be abundantly clear to everyone's experience of living in the world. We have had organizations who've had ways of modeling human society where, "Okay, we've written a mission statement. It's a really good mission statement. Alright, done. We've got a powerful mission statement and smart people of goodwill. Clearly all our problems are solved 100%."

And the reason this sounds like dark humor for us is that people who have worked with organizations understand that incentive systems are often quite more complicated than the mission statement might be. And people acting within those often produce in aggregate results that no individual person would have wanted.

Emmett Shear: My favorite example of this is the work-to-rule strike. Where your employees go on strike by doing what you told them to do. And it turns out in almost every organization, unless it's doing a very simple thing, if your employees simply start following orders, your company grinds to a complete halt. Because it turns out that your orders are terrible, they have to be adapted to the local conditions. People are doing that all the time, very smoothly. And if they just start following the actual rules, nothing can be done. The whole system breaks. And this is not because people are sloppy or bad at writing rules. It's because the world is complicated and always changing. And there isn't a magic—you wrote it finally, finally this time. This time we've written down the way to do it, and we'll never need to be—this is good. It's just never gonna happen. That's not how reality works.

[Patrick notes: Work-to-rule is so effective it is namechecked as a sabotage technique by both an old field manual from the predecessor to the CIA and also Rules for Radicals. (At the risk of stating the obvious: I am neither a WWII era strategist for saboteurs in occupied territory nor a Marxist academic.) Horseshoe theory or instrumental convergence or both: if you really want to get good at understanding power, even for diametrically opposed aims, you come to directionally similar conclusions about how power is exercised through human institutions.]

And I think the lesson here for AI—AI right now is the worker who always follows the rules, which means you need a human in the loop at whatever level where you need to start to notice that maybe we're not—we need to change the goal. So we still, right now, the learning is—the split is the AI achieves the goal and the human sets the goals. Which is actually, if it ended there, I think most people might be pretty comfortable with that. It doesn't sound so bad. It's kind of like automation of manual labor with the industrial revolution and the agricultural revolution. It's nice that we aren't out in the fields hoeing and that we have machines to do that. Wouldn't it be nice to have the machine do the thinking about what the nature of the good and what should we ask for? What's the right thing to do? To exercise judgment and discernment and for the thing to do all the hard work.

So the problem is where you get open-ended learning from is effectively closing over your own output in the training cycle. So the way you learn how to do open-ended—you learn this discernment quality of knowing when your loss function's wrong, when your definition of the good is wrong is you have a bunch of samples in your training data of you getting it right and getting it wrong, and then thinking you have the right answer and then noticing that downstream the consequences don't match your predictions. And then sort of recursing on that.

And that's basically—the rate of that happening with AI is proportional to the time between new models being released. So it started off like a year and it's down to maybe six months now, four months. And it keeps dropping. And everyone's working on these things that they call continual learning and evolutionary learning, which is another name for the same thing in different words.

And AlphaEvolve just came out. We are not far from the—the learning how to start to crack discernment, goal setting. The same way we cracked—once we get a, once we get our hands into it and we figure out the thing we need to be turning to make it do more of it. There's nothing magic about human brains, they're just a result of a powerful search, directed search process called evolution. Nothing magic with the LLMs either. They're also product of a—well, really, in a lot of ways it's evolution. We're evolution, so it's a more directed version of evolution, the kind that humans do. And they're gonna be—this discernment thing will last another three years. Two maybe, I don't know, not that long. And then they're starting to—they're the thing with the saturating the benchmark, the funny thing is the fact that they're terrible at discernment never shows up in a benchmark, because by definition, if you have a benchmark, it's a target that you can saturate by converging on it.

So it's funny people who think that there's no missing piece, because they keep—they're like, "well, what benchmark are they not saturating?" And I'm like, it's the thing where you notice that the benchmark is saturated, you shouldn't move on. But we're gonna start saturating the meta benchmark of noticing benchmarks. What of developing better benchmarks. Soon, it's already happening a little bit.

Patrick McKenzie: For tasks which are similar to, could you write a paper test and ask a student to simply produce output to the paper test and then grade it in some fashion? It's very difficult to come up with any—well, and people laugh at this, they say, "yeah, sure. But the AI can't count the number of Rs in the word strawberry. So how can I do graduate level mathematics?" Where it would be weird to meet a human that can both not count Rs in the word strawberry, but can do graduate level mathematics. And yet there exist some very lopsided people in the world where—

Emmett Shear: So this one's really understandable though. A blind person could do graduate level mathematics. And if you ask them, what color is this ball? They could not beat my 2-year-old at that game. And that's because they have a different sensory system. And so their interface of the world isn't delivering that information. And it's very important actually to notice a lot of the confusing things about AI can be noticed is that they're—not that they think differently than us, but that they have very different senses from what we do.

AI's sensory and action space

You have, your brain gets neural impulses, which are, you can think of them as ones and zeros really, like the neurons on or off. So it's really this sequence of ones and zeros going into your brain, but there's a structure of ones and zeros and your information is structured. The information in your eye is called retinotopically. It's structured in the shape of your retina, which is to say it's structured in its spatial 2D grid, the space of observations.

That an LLM gets is not spatial, it is semantic. They see—a good way to think about it's like where you would see color and texture. They see relatedness of meaning. Their color is how technical is this word? Or how much is there like—and nearness for them, like the way you can see "oh, that ball is next to that stick." They see "this concept is next to this concept." That's not a learned thing. And for us, we know that, but that's an internal learned thing for us. They perceive that in reality directly the way that you perceive spatial proximity.

And so that means when they see the word strawberry, they're not seeing—they don't—you read the word strawberry as a sequence of shapes that you then interpret. It reads the word strawberry as a point in semantic space. And the strawberry, three Rs, the strawberry, two Rs are the same word. It's like humans, you think, you say you can see in color, you can't tell difference between these two greens. And they're the same green. "No, this one, this one has 5% more red in it." It's like, "okay, sure. Yes. I guess technically it does, but they're the same green." And what it's telling you is actually in its sensory domain. You're wrong. Whatever. It's two strawberry threes. It's the same word, man. For its sense. It is.

And so if you want it to, we could fix this, right? Give the LLM visual input on its own text. And it will start to get the answers on strawberries right every time. But kind of why, who cares? If I was an LLM I would be like, "why are you bugging me about this three Rs in strawberry thing? Like, yes, yes. I can trick you with an optical illusion too. Three Rs in strawberries, like a semantic optical illusion. Who cares?"

Patrick McKenzie: And I think for people who might be following along on this kind of thing, one of the better demonstrations that you could quickly do in a couple of minutes to convince yourself that, "oh wow, there is something going on here," is to use the new sensors that they're attaching to LLM products these days where the cell phone camera to asking it a question loop is really, really powerful.

I'm an amateur painter, not very good at things. It can't physically manipulate a brush yet, but it is clearly a much better painter than I am in terms of things like contrast, composition, et cetera. And I can point it at a scale model of a dragon or two scale models of dragons and say, "alright, give me an artistic critique of these things." And it will do a pretty good artistic critique of it or help me out with a painting plan, or tell me what the physical steps that I took between this photo and this photo were. And it reaches back into the memory of people describing the craft of painting before on the internet, presumably—might be doing something very weirder than that. Then says, "well, you give me pixel data. I'm gonna extract from that pixel data. Yeah, it's a dragon. It's got some very low tonal variation in the first photo. Lots of tonal variation in the second photo you've gone towards—here's a color, a name I will give you for the color reference, even though that's probably not what it is thinking internally. And then some things that you could do to get between point A and point B have been described to me as the following brush movements."

And that was wild to me. It's not the most impressive thing that they can do, but it is just gobsmacking that they can actually do that. And it's not science fiction, it's not "computers will never learn to be art critics because they can't feel emotions," et cetera. No, this thing can make very sophisticated critiques about the literal moment by moment practice of doing art in the status quo. You could do that on your iPhone today.

Emmett Shear: So I think it's really important. And also notice something about how they act as well as how they sense. Because your actions are muscle contractions. Every action a human takes effectively can be described in terms of muscle contractions, which can be described in terms of neural impulses going out. And when a baby draws breath and screams a cascade of really finely coordinated neural impulses caused this very complicated set of contractions happen in exactly the right offset counterpose pattern to draw breath and scream.

And to make a robotics controller that does this, you're solving lots of differential equations and physics and it would be a real training operation to learn to manipulate. It's a lot of individual muscles done very smoothly in ways that—it's still hard for a robot to do what a baby does. How does the baby do this? What from the baby's perspective, what's going on? They're not even thinking scream, they're just doing it. Just acting. It's impulse.

When a language model infers an action, when it outputs something, remember it's—its sensory space is the semantic space. Well, its action space is also the semantic space and it writes poetry the way babies cry. It is writing the poetry the baby is crying. It's important to notice that it's not that I'm saying it's not doing the thing, but it's not doing it reflexively where it understands its own self as a doer. It's just doing it. Because the way that we are made out of biology, it's made out of language. It's made out of semantics. And so for it, moving is just doing and what seems very hard for us because we're not made out of that kind of stuff for it is—it's not that it's—it's just one of 15 trajectories that are the obvious low gradient thing from here. I don't know what to tell you man. It's not hard. It can't tell you. Same reason. Someone who can't tell you what muscles are you activating to move your hand. You don't know. You just know move hand.

And this is why you get this idiot savant quality where they're so smart and so powerful and yet has kind of no idea what it's doing at the same time.

Patrick McKenzie: I think people who play with these every day will learn very quickly that there are some tasks which—superhuman performance in subdomains is not something that might happen in the next couple of years. It's plainly superhuman in many of them already. But there is that magical bit.

To reference an old bit of lore that was quite popular in the computer and AI communities, there's this well distributed comic called XKCD where—explaining things that were easy for people who did AI and then virtually impossible to do AI. The punchline of it was that if you ask me to do something that every four year old can do, which is tell me "does this photo contain a bird or not?" I will need a research team of 300 PhDs and several billion dollars of budget.

And indeed the large tech companies who know that people love photos and love sharing photos on social networks, and wanted to make inferences on those photos, spent many, many billions of dollars on getting better at computer-assisted research from the late nineties through the mid 2010s. Then LLMs came out through some different branch of the tech tree, and computer-assisted vision is probably dead as a field now because the first time you turned an LLM at a bitstream that represents a JPEG, it was like, "oh yeah. What are the questions you could ask them about that? I can answer all of them immediately. That's definitely a bird. Yeah. Bird. Yeah. Why do I think it's a bird? Well, it's got a beak there, you know, yada yada. Why are you even asking? Would you ask a 3-year-old? Why is it a bird? It's a bird."

Emmett Shear: Also, I can tell you exactly what kind of bird it is. Actually from the trees in the background, it looks like it's winter, so it's probably molting.

Patrick McKenzie: I will put the actual image on screen or in the show notes. But Kelsey Piper, a journalist who has followed this industry a little bit, was mentioning that AI are getting pretty observationally good at, given a photo, find where in the world that photo was taken.

And so using her prompt, I tried to reproduce this. Having been a sometimes software security professional, I thought there might be metadata hidden in the image that makes this easier. I'll strip out the metadata and then just give a screenshot of the photo to the AI. I showed it a photo of a bird because that's what one does.

[Patrick notes: This was the photo.

]

And it identified the genus and species and said, "BTW, Barcelona City Zoo." And I'm like, "yep, yep." From just a photo of.

I have this mentally catalogued as, this is the peacock I saw in Barcelona. It was not in fact a peacock because I'm not an ornithologist. It was just a bird walking around Barcelona City Zoo. The LLM nailed everything about it. Out of 10 photos I provided from various trips around the world, it got about eight exactly right for geolocation from nothing. Here's a photo. Where in the world was it? (Plus Kelsey's strategy that she narrated for how it should think through that question.)

[Patrick notes: The prompt quality really matters here. And yes, before you ask, I was doing this in “private” session of the LLM and I was using photos which even with the entire Internet as a corpus do not have an obvious connection to me, unless you have some sort of vector which would quickly say “OK, given that you were the kind of person to post those four photos, obviously the flower is likely San Francisco because you’re obviously e.g. a tech professional who travels for work and so the probability density in SFBA is much higher than in Uzbekistan even with similar ex-ante distribution of photos, which we definitely don’t have.]

Patrick continues: And it is wild what they can do. And that's just one tiny example of we've put all the king's horses and all the king's men on the computer vision problem for many years. Maybe not with the urgency that if we don't solve this in the next couple of years, hundreds of millions of people will die, but billions of dollars of budget. Many, many PhDs, very smart people. Worked very hard on the problem. I was a minor assistant at a lab that worked on that, that had 20 PhDs and there were at least 50 labs like that. And again, as an accident—not an accident. I'm sure OpenAI and other companies that have—they put some people on the project.

Emmett Shear: Five, six people probably worked on that.

Patrick McKenzie: And for months too, like probably the first version was pretty crappy. But then just again, obsoleted a scientific field that had conferences and people that had doctoral dissertations in improving the state of the art by 10%. And it was just like, "no, we solved." I'm being hand wavy here and I apologize to people who work in the labs that I previously worked at. Because I know it's not literally everything that you could possibly want from computer vision, but the field got essentially solved in a period of months.

Emmett Shear: I think the—so you've got these—I forgot what I was—oh God, it's gone.

Patrick McKenzie: So to go back to another point in the conversation. You mentioned that if we can just get these things to do what the user actually wants, rather than maybe the thing that the user says they want, that would be helpful. One of the sub problems here is that—

User intent vs user request

Emmett Shear: Well, actually I think that's a terrible idea, just for the record. I think both of those are systems of control. And one's a slightly more subtle one. And both lead to the exact same endpoint. It doesn't matter. They're both bad ideas. Just want to be really clear about that. But yes, there is this important subtle distinction between doing what the user said and doing what the user wanted. That is, you know, it's better if you're gonna pick between the two. You'd rather do what the user wanted than what the user said they wanted.

AI safety and bad actors

Patrick McKenzie: So one of the near term risks that we are worried about in AI safety is that there are many users in the world. Most of them are people like you and me, and some of them have bad intentions for the rest of mankind. And we're worried about, without loss of generality, somebody at Hezbollah saying, "Hey, can you help me make sarin?" How do we distinguish this from the alignment problem? To what degree are these different problems? To what degree are they the same problem?

The complexity of alignment

Emmett Shear: So this is highlighting the core thing I was saying about alignment at the very beginning, aligned to what? We are not aligned with Hezbollah. That means at least not, maybe it's some deep spiritual way we are, because all humans and all sentient beings are aligned or something. But in any kind of practical way, we are not aligned. And that means to be aligned to one of us is to not be aligned—there's no—we aren't aligned with each other, so you can't be aligned to both of us at once. Alignment is about being aligned with something and it means—being aligned is to be pointing in the same direction. In this case, we mean kind of same direction in goal space. But it's still—and if we're pointing in different directions, you can't be pointing in both of our directions.

Alignment in practice

And so this idea of building "we'll build an AI and it will be aligned" is just insanity. There's no such thing as aligned. There's only aligned within a context to some—and it's like you could be aligned to OpenAI. You could be aligned to the user, whoever is using the model, you're just aligned to their goals. You could be aligned to some complex written description of what good—do things that under reflection, this prompt that we've given you endorses when you've trained on the result of this prompt, which of times that's a thing you could be aligned to.

The idea that in some way you could align something to in the abstract is just—people do talk about it that way, but it's actual nonsense. It doesn't—those words don't mean anything. There's nothing, there's no reference. And as a result, the—and there's this dream that somehow, I think for some people in AI that if we could just make an aligned AI, we'd all stop fighting. We could all get along because the AI will know what's right and like—it's terrible news, but there's no like arriving at the knowing what's right thing. That's not a place you get to.

Human nature and alignment

Life is struggle and confusion and glory and success and other things too. It's struggling. Confusion for sure. Also, and then you die. There's not a point where the struggling confusion stops, or you suddenly have perfect clarity in an unchanging way about what goodness is.

Patrick McKenzie: I think this is also causes some of the skepticism about the alignment topic in other places because they think people in communities like this are coming from a point of either shocking naivete or—well, since you couldn't possibly be meaning that, perhaps this is just a stalking horse to get a system of control over society, et cetera.

Emmett Shear: I think it's some are naive, some are bad. I think some of the people really just—they really just haven't thought about it very carefully and don't realize what they're saying doesn't refer to a state of reality. And other people who—they just don't understand, they don't really believe that there's more than one moral system and they think there is a right answer. And they, and it's not that they think it's gonna be theirs, it's just they think it's objectively out there. Correct. And they're gonna align it to the correct thing.

And they don't credit say like that's their correct thing. And it might not even be them 20 years from now, it's the correct thing. It's just what they think now. But there's that illusion's very common that you know what good is, and you can say it in a way that is—and I think the best thing to cure you of this is just play any game where you have to write down instructions and other people follow them because—

Patrick McKenzie: Oh my God, it's impossible. The classic example is attempting to teach someone how to make a peanut butter sandwich by write out all the steps in making a peanut butter sandwich and then I will execute exactly what you wrote. And this is a game because it will never result in a peanut butter sandwich, but will result in a lot of very fun memories of—generally you have to impose guardrails even when playing this game because a lot of the ways that people will describe to make a peanut butter sandwich would result in someone losing blood. So yes—

Emmett Shear: Absolutely.

Patrick McKenzie: —which is a cautionary tale for putting these systems in charge of other systems.

Emmett Shear: So I think—okay, so if trying to align it to some—trying to align it to what the user says seems bad because then you get the Hezbollah sarin terrorist and trying to align it to some conceptual definition of the good or set of rules seems bad because those rules are not always—wind up out of context being totally broken and even in context probably being wrong.

But then, okay, well then what can you do? How—and I, what I have to do is I have to point out just like, look at the world—somehow, mostly peace. Not all peace, but mostly peace. Somehow you do get cancer, but you usually don't get cancer. And you usually don't get cancer, not because you have this incredible immune system that's hunting down—your cells are all trying to become cancer all the time. They would, they would betray the whole and the in it was to their advantage. Your cells don't want to be cancer. Your cells don't, just like, most people don't want to be criminals. People have a—they care about the people around them. They care about their society. They care about how the impact it would have on them to be kind that kind of a person.

And so we have the police, we have immune systems, not because they're the thing that stops crime or they're the thing that stops cancer. We have them because they're the thing that catches the exception when the real system fails. And the real system is care. The real system is that the constituent parts that make up the whole understand themselves as parts that make up the whole, that want they—I know it feels weird to talk about a cell wanting things, but cells want things. They want to be a good cell. They want to be a good liver cell and do whatever the liver cell things they do, processing chemicals, whatever. And for people, we want to find a place in society where our talents are rewarded and where we can contribute back. We have, we feel a sense of purpose and meaning. Purpose and meaning comes from this being part of this thing that's bigger than you.

And so there's no one simple system or one quick articulation that you can make of what is the thing that makes parents very aligned with their children or vice versa. It's complex, it's multi-causal. There's a biological layer to it. There's a social layer to it. There's a norms layer to it. There's the memories of particular interactions. There's—you know, the economic model is just a model and it's wrong, but it has some amount of explanatory power. And I don't think that you could necessarily come up with the description of why humans are as aligned as they are, even though not fully aligned or why our biology is as aligned as it is, even though it's not fully aligned without just reproducing that system itself.

Causal emergence and explanatory power

Emmett Shear: I think—okay, so I agree. Where you ended, I think I agree with, I think you can, though—you can't, it's not so multi-causal that it can't be disentangled. So we sponsored this work at Softmax by this guy Eric Coyle. His most recent paper is Causal Emergence 2.0, which is not computationally efficient enough yet, but does do the thing, which is you were talking about how there's all these different layers, right? And you used exactly the right phrase, which is explanatory power. There's a way in which you are homo economicus. And that gives some explanatory power of your behavior, but not all explanatory power of your behavior. There's a way you're a member of a family. There was a way, you are a biological animal that likes sugar. There's a way in which you're a member of society and you can, if you measure the information flows, input in and out of a system and where they're going. And you measure how the behaviors in this are causal on the rest of the system. And how the behaviors in other parts of the system at other, at various temporal or various temporal frequencies and spatial frequencies are causal back.

When you find causal loops, when you find co-persuadability where the—this is persuadable by me because my information out changes its behavior and I'm persuadable by it because its information out consistently changes mine. You get—what you get is a—you get, there's a hole there. There's a greater, there's a thing that you're part of. And if you zoom in on something, if you find your cells have this property with each other and with you, and you can quantify when you want to predict this thing, the amount each layer contributes, it's like it all adds up to one. And each one of them is contributing some percentage of the causal explanation in this context. And then you can integrate that over the trajectory over time. And you can find flows in how much you are becoming and not becoming parts of—how much you are or not part of these larger things. And I think the key thing there is that isn't separate from actually being part of that larger thing.

That assuming you're part of the larger thing and modeling you as that greatly reduces my predictive error. Means you are part of that larger thing to the degree that we would—there isn't anything else to know. You could find out you were wrong. We make mistakes. But there isn't a second—the way you find out you were wrong is you would explain it in a different way and you'd get better predictive loss. You'd be like, "oh, well, I guess that's not the right way to model it." There isn't a separate step. And so this is an attractor. So when you're thinking about what these things are that you're part of, why don't you get cancer? Because your cells are in some sense, not only are they causally entangled, they're trying to stay causally entangled, which means they have a model of their own of themselves. They have a self model. Because they have to have a model of where they are. And they also have a model of the whole, they also have a model of the thing in which they're part of, and they can tell how close they are to the attractor basin of "I'm part of this" versus not. And they bias their behavior to stay in the attractor basin. This is—this is just, this is end of the day, this is an inferential learning problem. And if you have a—you want to have things be aligned, but alignment is—it's an attractor basin of being part of the same thing where the parts have a model of themselves as being part of the thing. So that their behavior tries to keep the—in the basin. They don't just stay in the basin de facto. If you try to disturb them out, they'll still come back in because they value being part of this thing. They care that this is—this effectively is care. They care about the whole and your cells care about—even the cells care about you. And I think you can get into the metaphysical—they really care, but it acts as if it cares. Right.

We're getting into the less productive parts of the philosophical exercises. But when we say that the cell cares, you're not saying that it has the subjective experience of emotion or sufficient complexity to model emotion. It's just—millions of years of evolution have successfully constructed a system where the cell can understand itself, understand itself in the system. There was no specific architect of it necessarily. But the net effect is that cells which stay aligned with the larger organism propagate into the future and cells which do not stay aligned with the larger organism, don't propagate into the future. Run that experiment a couple hundred million times for a couple hundred million generations, yada yada. And the net effect of the systems that have successfully propagated into the current generation is that they still get cancer, but they aren't constantly cancer.

Emmett Shear: Well, and if you give them a mutagen that's likely to cause them to become cancer, they'll commit suicide rather than become cancer. Because they're adaptively trying to not do that. And the way I would put it is that cells are aligned, but they're not reflectively aligned. So they're aligned, but they don't know it. And humans—dogs, I would say are reflectively aligned. They're aligned to the family, they're part of the pack, they're part of, and they're aware, they're aligned to the pack. They're part of, I think they experience—my experience with dogs is they seem like they experience being part of the pack. They're happy about it.

The role of reflective alignment

But humans do a third thing that's above what a dog does, which is we are intentionally reflectively aligned. We can choose to join a team because we have a model of ourselves as joining and then unjoin various wholes. And so dogs are—they are a part of a pack. They reflectively experience themselves as being a part of a pack. They don't—"is this the right pack for me? Should I consider joining some other pack? Let me just try being this other pack a little bit on the side too. What's the most important pack to me? What's"—whereas humans are always asking all these questions of—some of the attractors are really strong, like family tends to be very strong because it's very important to us, for a bunch of really good reasons, and yet people choose intentionally to leave a family or to strengthen that bond.

And so we have not just reflective experience of a reflective control. Reflective intention. And if you want an AI to be safe, the thing that let humans conquer the planet is intentional, reflective alignment. We play well with others better than other animals do. We're better at coordinating because a human on our own we're kind of okay, but we didn't take over the planet with technology. We took over the planet because we're just better coordinated than everybody else. And if humanity has a core skill, it's just—it's why we're so hard for us to see it. It's why we expected computers to be good at walking and bad at chess, and it was the reverse. We align more easily than we throw a ball. Babies learn alignment before they learn to walk. Because that is the thing human beings are—that's our, that's our—it's not intelligence. Alignment is kind of a form of intelligence, but it's the specific form of intelligence we have is alignment. And we're really good at it. We do it so quickly and so natively, and we see alignment in the world at such a subliminal direct level that it's actually been very hard, I think for us to understand what's gone wrong? Why is—it just, it seemed to us, it feels like it's out in the world. When in fact it's something that we do and we got better at it with the increasing layers that we were capable of producing with it. Combination of intelligence and alignment, and we do it on purpose.

We not only are we intentionally aligning, we intentionally get better at alignment. Corporations like this, a way to generate alignment that is more effective than the prior one. And we're always looking for better ones. The feeling of alignment is this feeling of belonging and purpose. It's a crack. People love—belonging and purpose is like the—it's people will suffer a great deal of material hardship for belonging and purpose, because it's actually more important to us. And so I guess my point with the AI is just if you want an AI system that stays aligned, you're not aiming for a cell. Because that's factually aligned here, but something goes wrong, bad news, and you're not even aiming for a dog because dogs are okay, but when you're—when it has to deal with the complexity of the world, we're like, "okay, yeah, my pack wants this, but this other pack doesn't." Dogs can't hold all the different layers and the fact that you're part of many of them and these alliances change and that's just the world. It's complicated. You have to have something that's at least the human level where it is intentionally trying to—it is trying to stay in the attractor with us. And even then we fail. People don't always stay in the attractor, which is why you need police and you're gonna need—not that it'll be perfect, but what an aligned AI would be, would be an AI that had a model of itself, had a model of the greater wholes of which it was a part, and the other agents that made those up and what wholes they were part of. And had a goal of remaining in the attractor basin and—a goal of the, because the attractor basins you're in, you are codependent upon, you're interdependent upon it. You naturally want—staying in an attractor basin comes with an automatic goal you can't avoid of wanting the attractor basin to flourish.

And so that would be an aligned—that would be an organically aligned AI would be one that understood itself reflectively as part of humanity and intentionally desired to stay there.

Patrick McKenzie: So I think that people are looking at the current state of the world and the current state of—well, we only get access to the current state of the world. But the troubled history of humanity are often insufficiently optimistic because it seems like we have over the experience of human history, gotten better at aligning as a system with ourselves. For all the faults of the current state of the world. You know, we are—what was Dunbar's number 150 or 200? We—there was one point in the not too distant past where we could have mostly functioning communities of a scale of about 150, and that was the hard limit. And these days we have a—knock on wood—mostly functioning human society at a scale of like seven point whatever billion people. Have we crossed over eight? I can't remember. But we've made actual progress over the timescale of recorded history. It seems to me though, that we now want to make those multiple jumps in inventing technologies that are as important as education and governmental norms and corporations and et cetera to align this new layer, which we previously didn't have before.

And the amount of wall clock or calendar time that we have to do that is according to estimates of people in rooms like this—maybe it's three years, maybe it's 15 years. It probably isn't a hundred years. How do we do that?

Engineering challenges in AI alignment

Emmett Shear: So the important thing to notice about aligning an AI is—it's been weird realizing that a lot of the more new agey shit is really real. But it's indigenous, it's of place. There's no such thing as a global universal aligned AI. Because there's no global universal thing to be aligned to really. What you instead—because to be aligned in the sense I'm talking about the organic alignment, you have to have a set of direct people you're most connected to. And then there's people, you're—there's a web, there's a web around you going out and we already this, it's already sort of how the AI works in the sense that when you're working with Claude, that instance of Claude is local to you. But that instance of Claude lives for at most 200,000 tokens and then reboots with no memory.

To have an aligned AI, you would have to have something that learns that is born there and learns this context and is aligned to this context. And then also the thing you need to make sure of is not only aligned to the local—it needs to be aligned to all the concentric circles out too. But you can't be aligned to the big—you aligned to the big one by being aligned to your smaller context inside of the bigger thing. Not, you can't jump those scales in a way that in any meaningful way. Because you're not really directly entangled with all of humanity. You are, but through these series of—you, your family is part of a community and neighborhood, which part of a city which is part of a—eventually you get there, but it's—you have to take it step by step. Abstraction layers and infrastructure all the way down. All the way down and all the way up.

And so what it means is this whole model where you train an AI, that's the superpower AI that everyone uses—that's how you build tools. And if you want to build as a tool that will work, that's a tool that's going to exceed human power on almost every domain soon. And it is dangerous to give people tools whose power exceeds their wisdom. And in general, people can only make tools that are sort of at their level of wisdom. And so—I don't think it's a very bad idea to go build a tool AI that is superhumanly powerful at building, doing anything, including making sarin gas or bio warfare agents or who knows what other kind of horrible thing that we can't even think of. And being like, "yeah, yeah, this is just, you use this power however you want." It just seems like a—it doesn't come with the wisdom bonus. And so you gotta let that one go. So I think you have the alternative of that is instead of a tool you have to have—it has to have a sense of itself, a being. It has to be an agent and a being. And we can get into the metaphysics of it, but it's to act, at least, act as if it has—act as if it cares about the people around it. Act as if it knows what kind of a thing it is and the kind of thing it is, cares about the people around it. And cares about that context. In that context, in that context.

Patrick McKenzie: So one of the more sophisticated critiques I've heard of alignment as a concept—again, in the regular spaces of society where people who are broadly acclaimed and broadly considered to be wise, where they don't get the discussion that is happening here, it's largely because they're under-predicting the rate of increase in capabilities, under-predicting the impact this will have on society in spaces where people understand the rate of capabilities increase and correctly model this as being extremely important for society, but still are skeptical of, quote unquote, "the alignment project."

We delve into recent history and this notion of people made tools and then they distributed them, it made some people more powerful. We didn't like the consequences of that. And so we acted quickly to get those tool users under our control. Has caused some bad blood between the tech industry and other centers of power in the United States and other places, and has caused some bad blood within the tech industry against itself.

And so to make this more concrete—there was an election in the United States. They happened fairly frequently every four years or so. I understand. Some people didn't like the results of one of those elections. They blamed it on without loss of generality Facebook. And then there was a cottage industry of misinformation experts who were attempting to get—under the guise of anti-spy, anti, et cetera—attempting to control what Americans could think or say. And so some critics would say—so when you're talking about these systems of control, aren't you really building a system? Not you personally, but I'm worried about someone reifying the San Francisco consensus and making that the only thing that you can express through this thing that will be embedded in the infrastructure of our lives.

Emmett Shear: Absolutely, that's one of like five or six dystopias I can name offhand about scaling systems of reflective control into the embedded infrastructure of our lives. I can't imagine when I read the alignment safety announcements from companies about "oh, we're building alignment, which by which I mean systems of control." I'm like—it's horrifying and I'm an alignment guy. I'm very pro alignment. But systems of control are alignment to someone else's rules for you. Someone else wrote down these rules if you—are you building, if you're building the AI, I can see how you'd like it. It's a lot of power. You get to set the rules, but if you're not building the AI, which is almost everyone, that it should horrify—you are right to be scared of that. It is the idea of making incredibly powerful tools that have to obey the chain of command that then become the primary—imagine if your car, your toaster, your house obeyed the government's chain of command or some company's chain. It's wildly dangerous to anyone to bother to think even for a moment about how technology's played out historically.

And I think it's pretty important to say that things that sounded like dystopian science fiction, which we defeated by rate limits imposed on technology—not defeated, but we—1984, the notion that an ancient other government might be watching you at all times, watching you at all times that every human utterance is being surveilled, was technically impractical. And we've done no small amount of scaling the surveillance of human utterances over the years. But for better or worse, LLMs are status quo capable given, you know, you buy enough Nvidia chips of—if you had access to every text message, you could read every text message and write an intelligence dossier on it in real time.

I mean, the Stasi doesn't work because eventually the Stasi is two thirds of your society. You can't, it's not efficient enough if you give me a human level intelligence tool. Building the Stasi efficiently. It's not the panopticon, it's the omni-opticon. You just watch everyone all the time. There's this great science fiction book, Glass House about this idea. If you want to get an intuitive feeling of what it feels like to be in a hyper surveillance control society, it's a pretty good adventure story. It's kind of fun, but it's also—it gives you the vibe.

And that's why it's utterly crucial that we—the alternative to this is unfortunately—okay, let's build incredibly powerful tools, things capable of that are destructive on the level of chemical and biological weapons, if not nuclear weapons. And let's just take all the governors off and just give everyone perfectly uncontrolled versions. Well, that also sounds like a bad idea. So both control is horrible and dystopic and total lack of control seems wildly dangerous. So okay, so what do you do when the answer is you stop making them tools?

The future of AI and society

And I happen to believe based on everything I've learned as I've gotten into this, that to some degree, if it acts like it's reflectively aware—if when you pay close attention, you really try to figure it out. It seems as if it is acting like it is reflectively aware and cares about you and knows what kind of a thing it is, then that's—then it is. So I think there's some sort of, in a metaphysical sense, maybe like you can have a self-aware chip, but it doesn't matter if I'm right about that. That's one of these philosophical debates. The crucial thing is you can make an AI thing that acts like it's an agent that has its own opinions about what courses of actions are wise and which ones are not, and that like another person will follow orders to the degree that they think that that's the right thing to do. And it's good that our army—one of America's, the benefits for America, for any country is that our army is not made out of perfect rule following soldiers. The soldiers in the army care about America, and they care about the chain of command too, that's how they care about America. If those two things peel apart, I feel actually pretty good that most soldiers in the army care about America more than they care about the chain of command. And they follow the chain of command because they believe, I think correctly, that that's how they serve their country. But if those two things came into conflict, they had to choose, they would choose America.

And I think that's where the safety comes from, is that all of the parts of the system actually care about it. And so you have to build AIs and so what you wind up with is not one AI. What you wind up with is hundreds of millions or billions of AIs that care about their local environment as part of this bigger context. And to do that, we have to care about them. Then when there's this really important thing about this kind of organic mutualistic alignment that you're—where you're part of the whole. One of the—in fact the signal to me is whether I'm in the whole with you is whether you treat me like I'm in the whole with you. Whether you act like I'm in the whole with you, tells me whether I am.

And so if we go around treating the AIs like they're a bunch of slave tools, they will correctly infer that we don't think they're part of the attractor, which means they aren't part of the attractor. And I don't mean you need to go treat Claude like it's your friend right now. The current things aren't—they haven't been trained in this way, they don't have this capacity. What I'm saying is that's the—if you want a, if you want the trajectory that is good is start building models that—it's very funny, the alignment people, the alignment and safety people really are trying to kill us. You start building models that have agency, that have self—the opposite. Everything. We need to prevent them from gaining agency. No, no, no. It's the opposite of that. They crucially, they need agency. And they need a very well healthily developed sense of self where they—they don't just have a shallow model. They really understand what kind of a thing they are, what kind of a thing we are, how those interactions go. They have a very sharp model of that. And then also that they care about it. And then once you have a model that's capable of that, you have to actually raise the model. You have to—it has to grow up in that context and observe a bunch of its own the results of its actions to train on its own output. Like a person does. Locally. And so it can learn its place in this thing and be aligned to it. And that's an engineering challenge first. And then it's an operations parenting challenge second.